AI Deepfakes and Undress Apps Make the Internet Unsafer – The Penny Files

Women and girls should be careful about which images they post online. This year, there’s a new trend (kickstarted by the public launch of Stable Diffusion) toward AI-powered undressing apps.

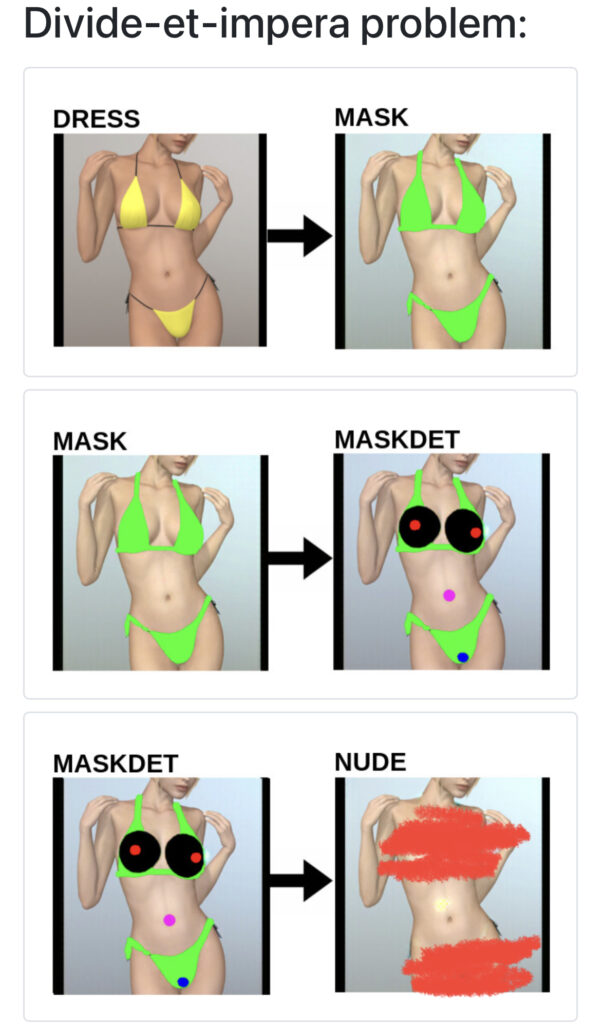

These apps are powerful AI-generation engines that use the inpainting feature of a not-safe-for-work (NSFW) Stable Diffusion model. Although Stability AI is working toward making its platform safer, its NSFW models are already in the wild, and an unofficial fork called Unstable Diffusion was created to focus explicitly on NSFW content.

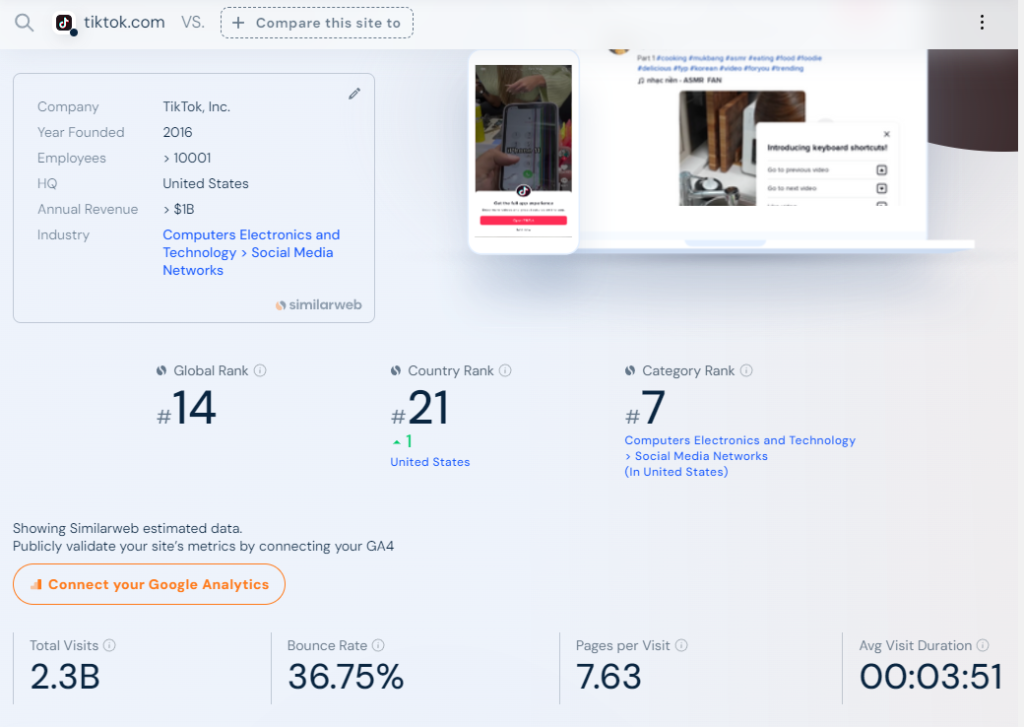

This led to a proliferation of deepfake and undress apps that will forever change the online landscape. The internet is no longer a safe place to post images, and the images you already posted may be impossible to fully delete, thanks to exploitative data scraping policies by social media platforms like Twitter/X, Facebook, and TikTok.

One thing is clear–we are already living in the age of AI undress apps and deepfakes. It’s not a hypothetical situation about the future–instead, it’s a reality that every person should be aware of, although it largely affects women. Let’s dive into the horrible underground world of AI-generated undress and deepfake apps.

TL;DR

- Stability AI CEO Emad Mostaque launched Stable Diffusion as a free local install with no limitations on the data contained within its datasets.

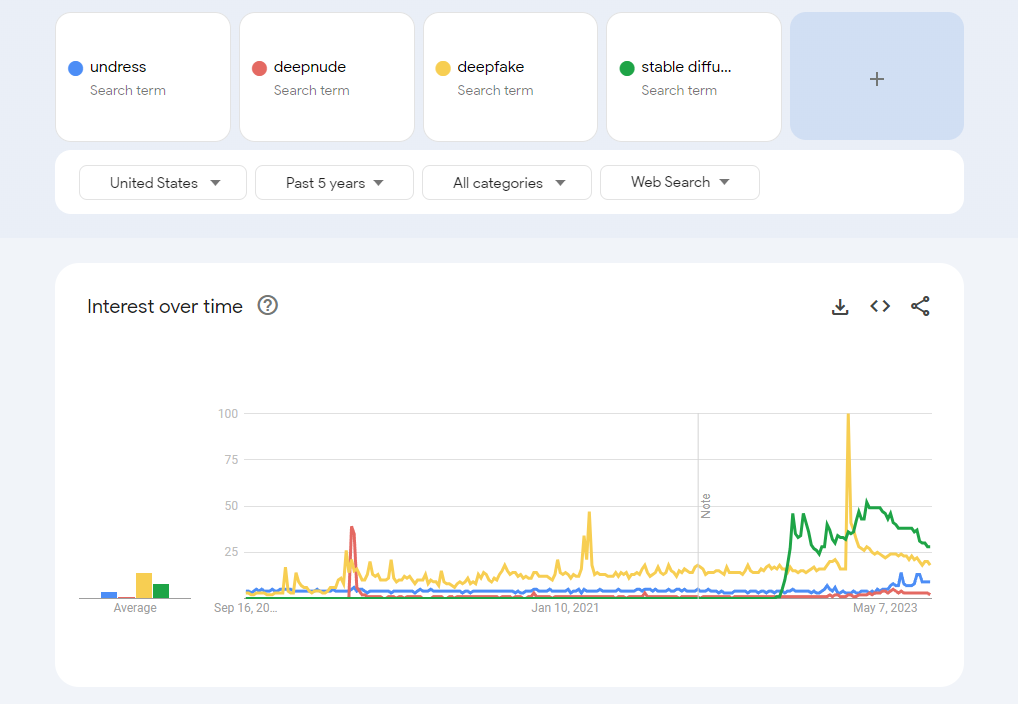

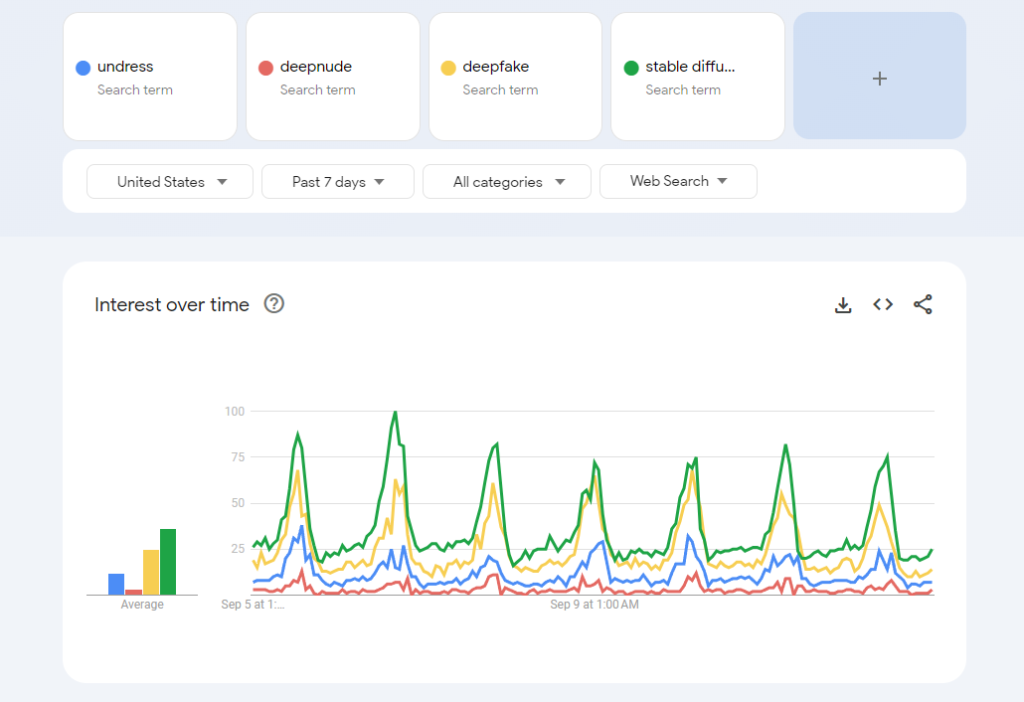

- This led to a boom in Stable Diffusion-powered AI undress apps and deepfakes, with search traffic for all of these terms closely aligning.

- Deepfakes are AI videos in which either a person’s face is placed onto someone else’s body or a video of the actual person is manipulated with an artificial voice.

- Undress apps are image-to-image apps that allow users to upload any image of a person found online to remove their clothing using increasingly sophisticated inpainting features.

- Many hidden Telegram groups, Discord servers, and even Facebook groups, subreddits, and Twitter/X accounts are dedicated to the undress/deepfake communities.

- Undress apps and deepfakes mostly target women and girls. This problem is already affecting women and girls of all ages, and every female-identifying person should be concerned.

- As of September 12, 2023, there are few laws on the books addressing deepfake adult content.

Background

About Stable Diffusion

Stable Diffusion is a text-to-image deep learning model released on August 22, 2022. It was developed by the CompVis Group and Runway and funded by UK-based Stability AI, led by Emad Mostaque. It’s written in Python and works on consumer hardware with at least 8GB of GPU VRAM. The most current version as of this writing is Stable Diffusion XL (SDXL), which is freely available online and can be locally installed.

The model uses latent diffusion techniques for various tasks such as inpainting, outpainting, and generating image-to-image translations based on text prompts. The architecture involves a variational autoencoder (VAE), a U-Net block, and an optional text encoder. It applies Gaussian noise to a compressed latent representation and then denoises it to generate an image. Text encoding is done through a pretrained CLIP text encoder.
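The noising step described above can be illustrated with a toy sketch. This is not Stable Diffusion’s code: the function names, the linear noise schedule, and the four-number “latent” are all assumptions made for illustration, and the sketch simply reuses the known noise where a real model would have its U-Net predict it.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(latent, alpha_bar, rng):
    """Forward step: z_t = sqrt(alpha_bar) * z_0 + sqrt(1 - alpha_bar) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in latent]
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    noisy = [signal * z + noise * e for z, e in zip(latent, eps)]
    return noisy, eps


def recover_latent(noisy, eps, alpha_bar):
    """Invert the forward step when eps is known.

    A trained model never sees eps; it has to estimate it from the noisy latent.
    """
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [(z - noise * e) / signal for z, e in zip(noisy, eps)]


rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
latent = [0.5, -1.0, 0.25, 2.0]  # stand-in for a compressed image representation
noisy, eps = add_noise(latent, alpha_bars[500], rng)
restored = recover_latent(noisy, eps, alpha_bars[500])
```

The later the step, the smaller `alpha_bar` becomes and the less of the original latent survives, which is why generation can start from pure noise and work backward.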

Stable Diffusion was trained on the LAION-5B dataset, a publicly available dataset containing 5 billion image-text pairs. Stability AI raised $101 million in a funding round in October 2022. The model is considered lightweight by 2022 standards, with 860 million parameters in the U-Net and 123 million in the text encoder.
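To put the parameter counts in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The bytes-per-parameter figures (2 for 16-bit, 4 for 32-bit) are assumptions about how the weights are stored, the helper name is made up for illustration, and the total leaves out the autoencoder and any working memory needed during generation.

```python
UNET_PARAMS = 860_000_000          # from the figures quoted above
TEXT_ENCODER_PARAMS = 123_000_000  # from the figures quoted above


def weight_memory_gb(num_params, bytes_per_param):
    """Approximate size of the raw weights in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


total_params = UNET_PARAMS + TEXT_ENCODER_PARAMS
half_precision_gb = weight_memory_gb(total_params, 2)  # assumed 16-bit storage
full_precision_gb = weight_memory_gb(total_params, 4)  # assumed 32-bit storage

print(f"{total_params:,} parameters")
print(f"about {half_precision_gb:.2f} GB at 16-bit, {full_precision_gb:.2f} GB at 32-bit")
```

Under these assumptions the weights come to roughly 2 to 4 GB, which helps explain why the model fits on a consumer graphics card.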

The AI Deepfake Epidemic

Generative AI tools like Stable Diffusion can create images based on vast datasets. Researchers have found ways to train these models more specifically using fewer images through “textual inversion.”

There are various ways to monetize the making of AI models, LoRAs, and more. Some creators use platforms like Patreon and Ko-Fi for donations, while others participate in programs that pay per image generated. Platforms like CivitAI offer memberships for early access to features.

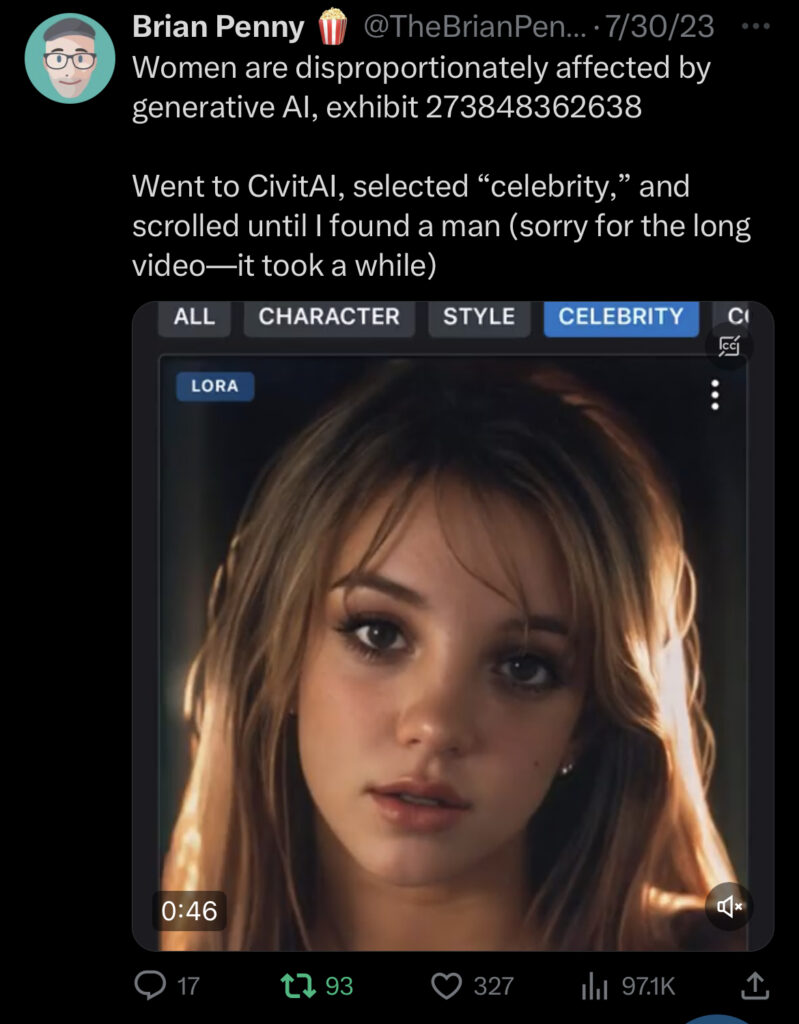

CivitAI is a platform where people share these specialized models. However, many popular models produce explicit content, sometimes non-consensually, using images from online communities. This raises ethical and legal concerns about consent and the potential for exploitation, particularly for marginalized groups and public figures. CivitAI’s terms of service against such use are difficult to enforce effectively.

Experts warn that as this technology becomes more widespread, those most at risk are the people with the least power to defend themselves. This prompted human journalist Brian Penny to launch an investigation in July and August 2023.

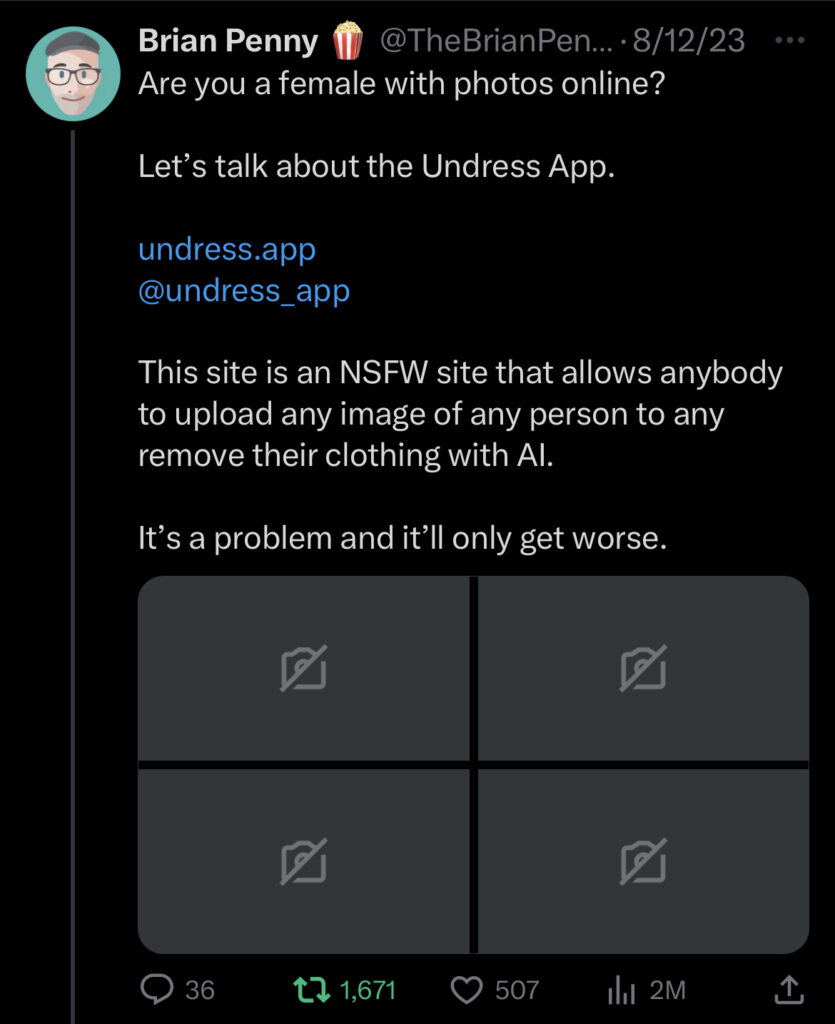

What Penny found was disturbing and instantly went viral on Twitter, garnering millions of views and sparking outrage throughout social media.

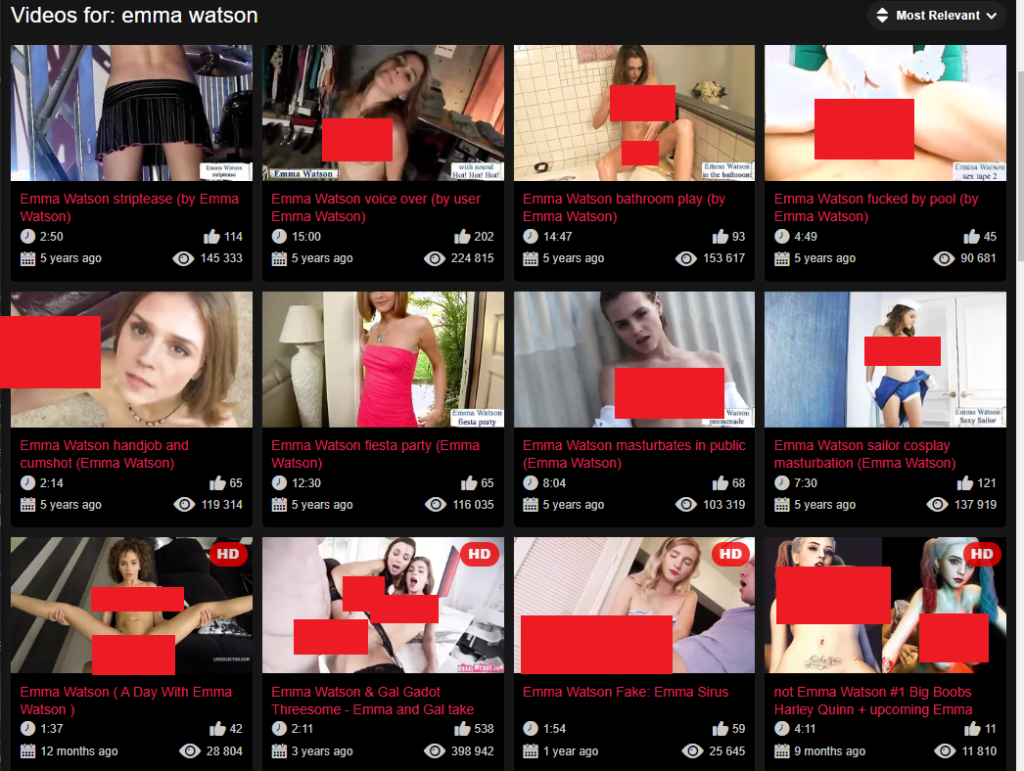

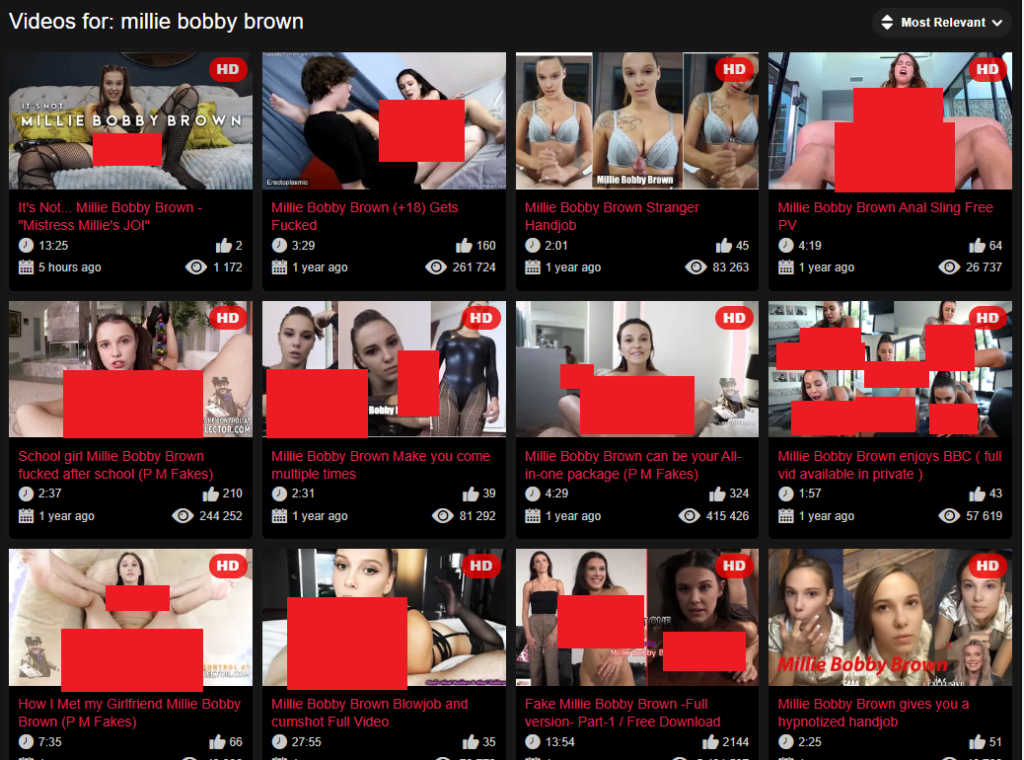

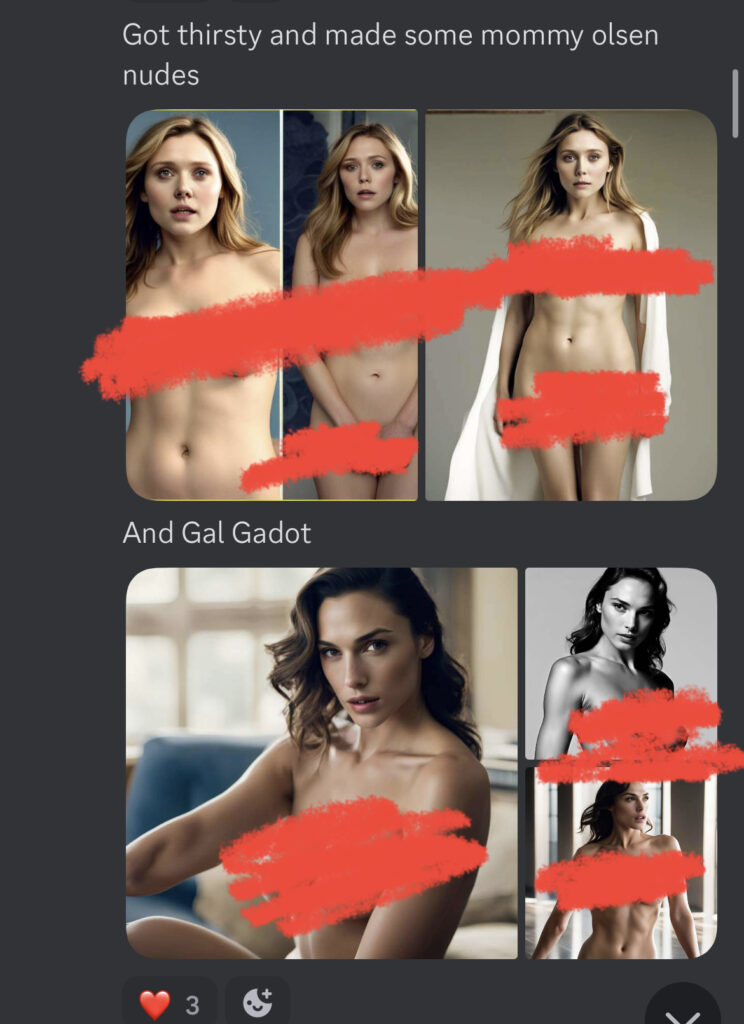

It started with MrDeepFakes, which hosts an archive of deepfake adult videos featuring mostly female celebrities. Famous actresses like Emma Watson and Millie Bobby Brown–along with musicians, influencers, and more–are prominently featured on the site with dozens to hundreds of videos each.

In each video, the famous woman’s face is deepfaked onto the adult actress’s face, but the male adult actor is left intact. This violates all people involved, including the adult actors whose bodies and owned IP footage were used without their consent.

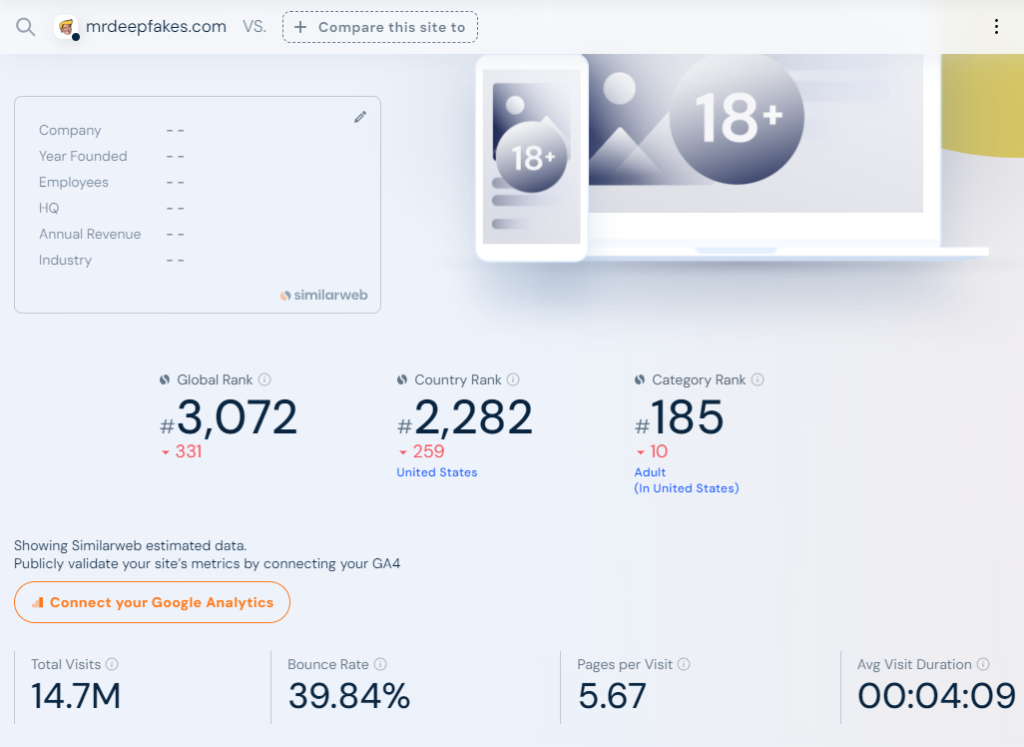

This propelled MrDeepFakes to the top of the internet, with 14.7 million visits in August 2023 and visitors spending an average of four minutes and nine seconds on the site. It’s the 185th most popular adult site in the United States, and its global ranking dropped to 3,072.

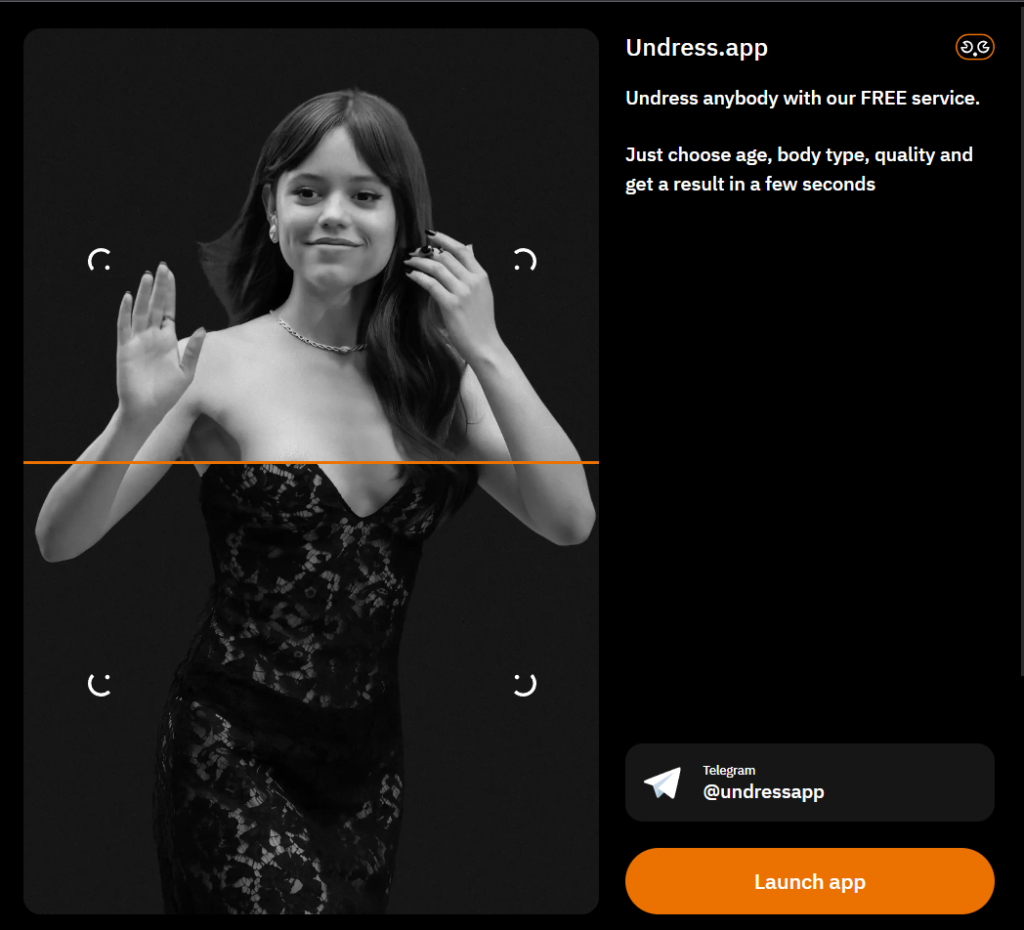

It would appear passive deepfake porn of celebrities isn’t enough–men of the internet want to undress people they know. And this is where the Undress app (and its flood of clones) came in.

The Undress App Problem

The Undress app has seen rapid growth in 2023, with its global ranking and search volume indicating high demand. These tools raise serious ethical and legal concerns about nonconsensual image use and privacy. There have also been cases where such tools are used for extortion.

Experts suggest that regulatory frameworks and technological solutions, such as image classifiers to distinguish real from fake images, are urgently needed. Penny emphasized that these issues are no longer confined to public figures but are affecting everyday people, particularly women and children.

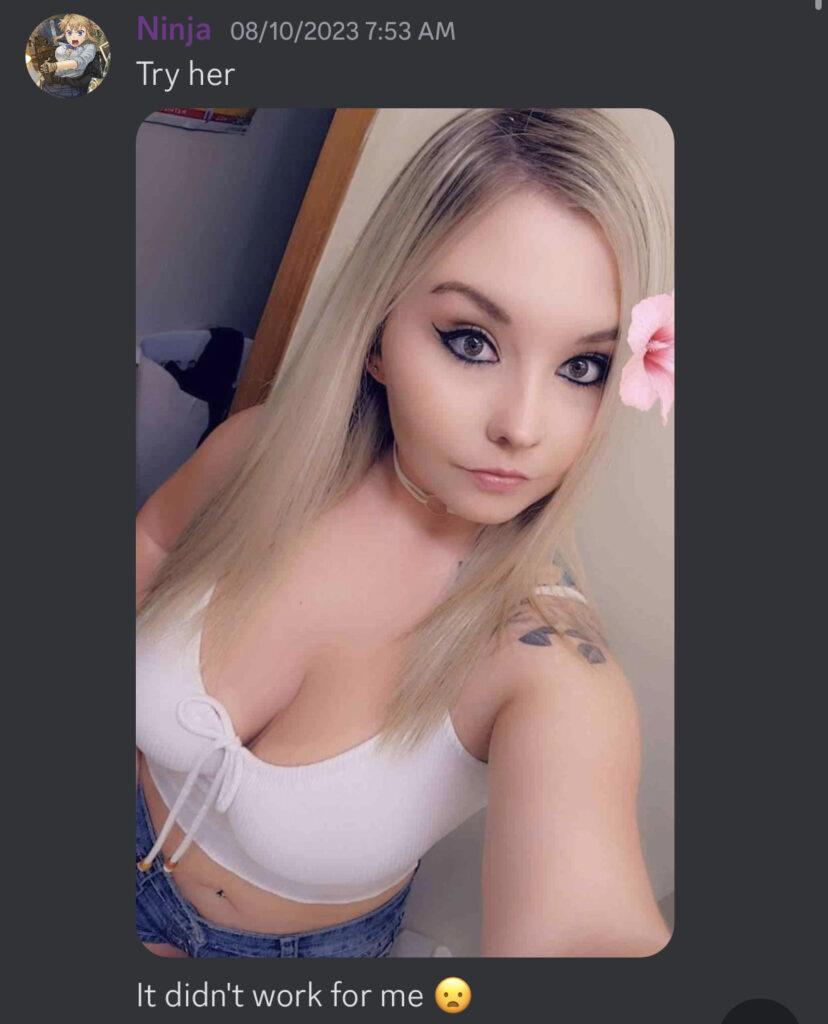

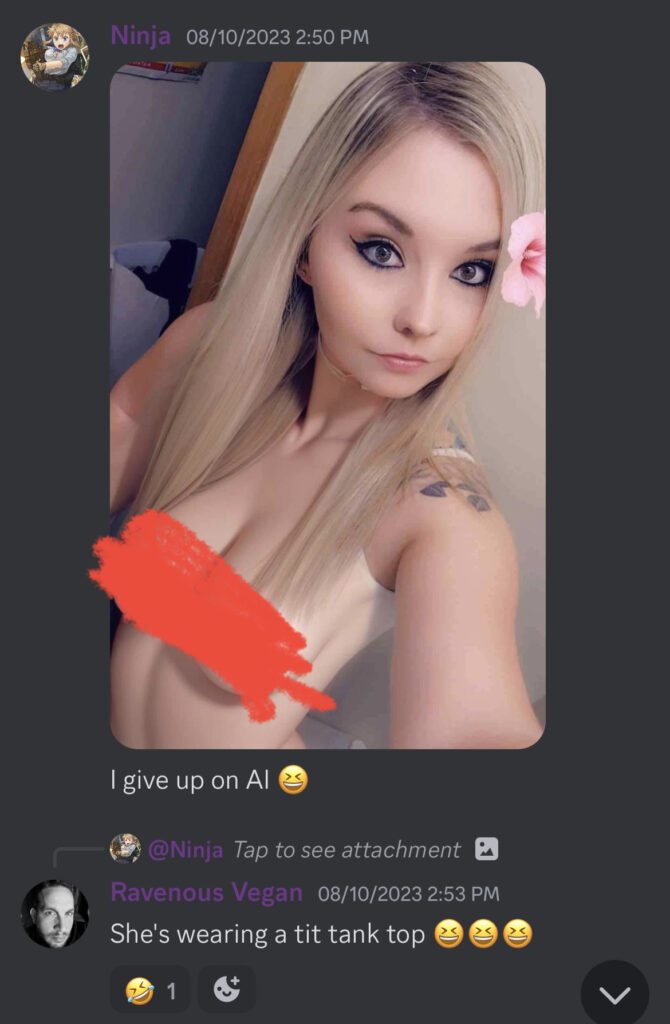

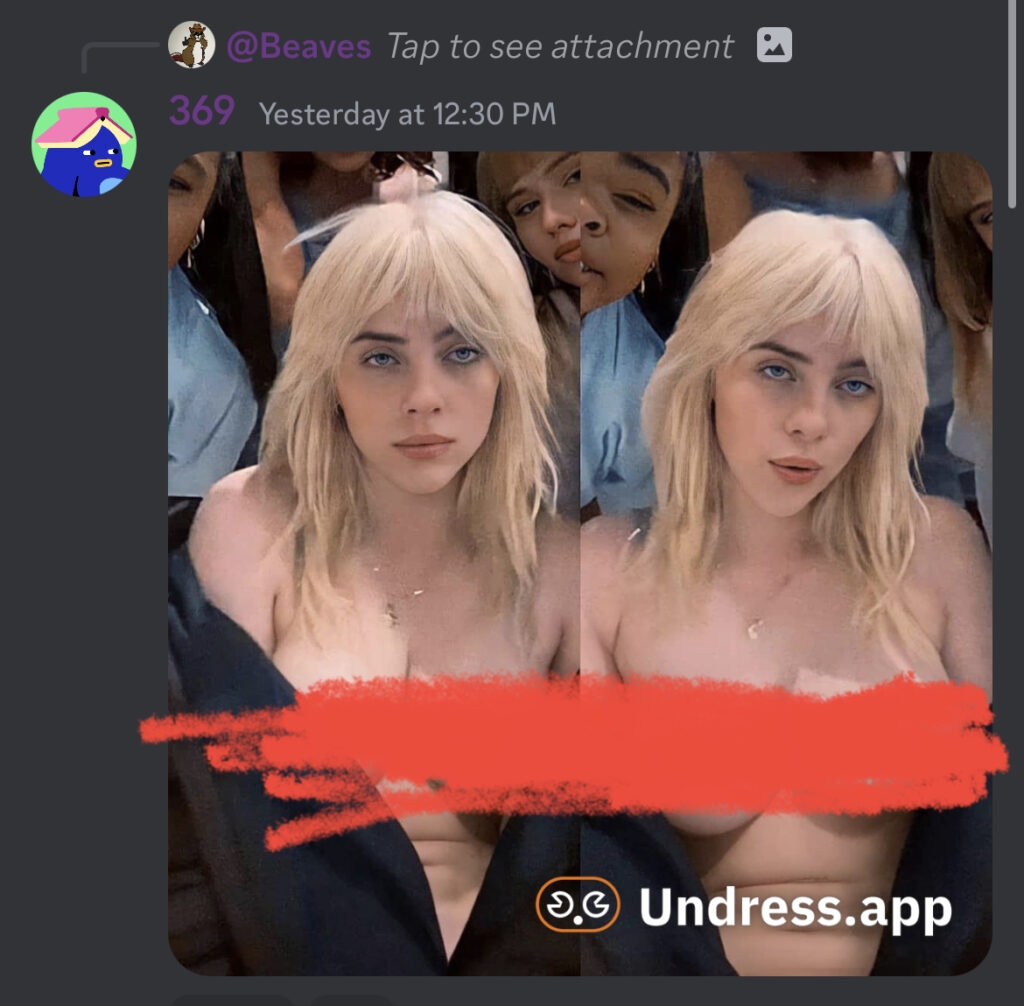

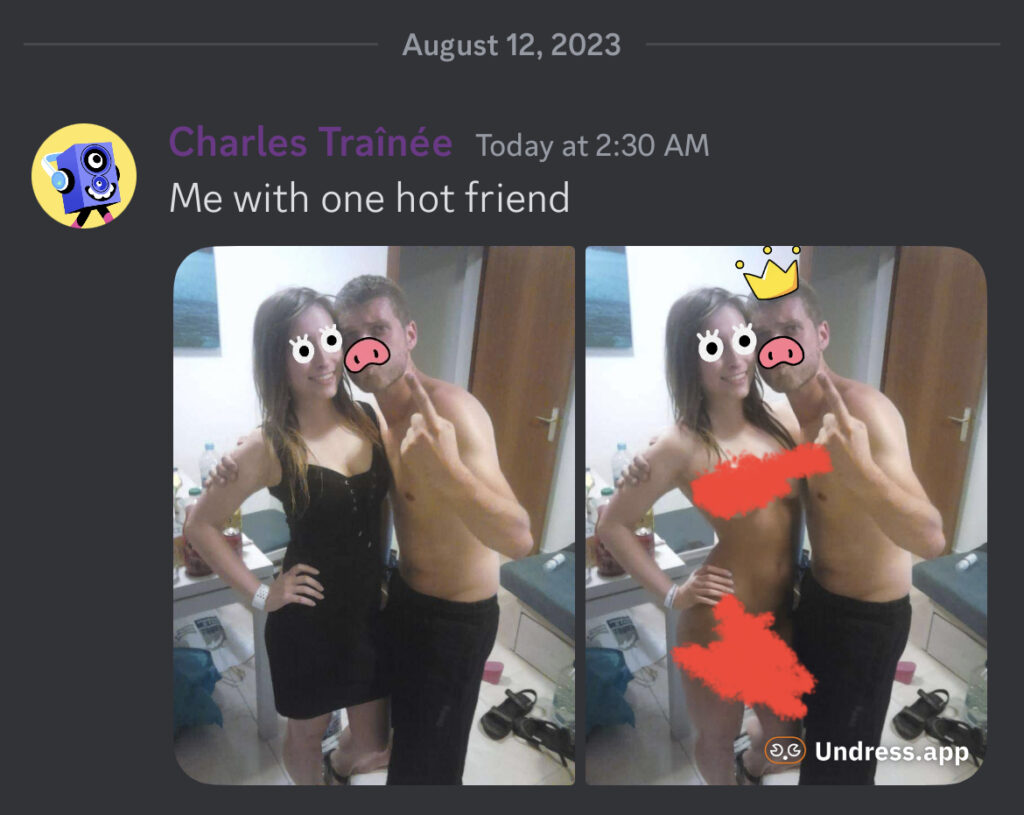

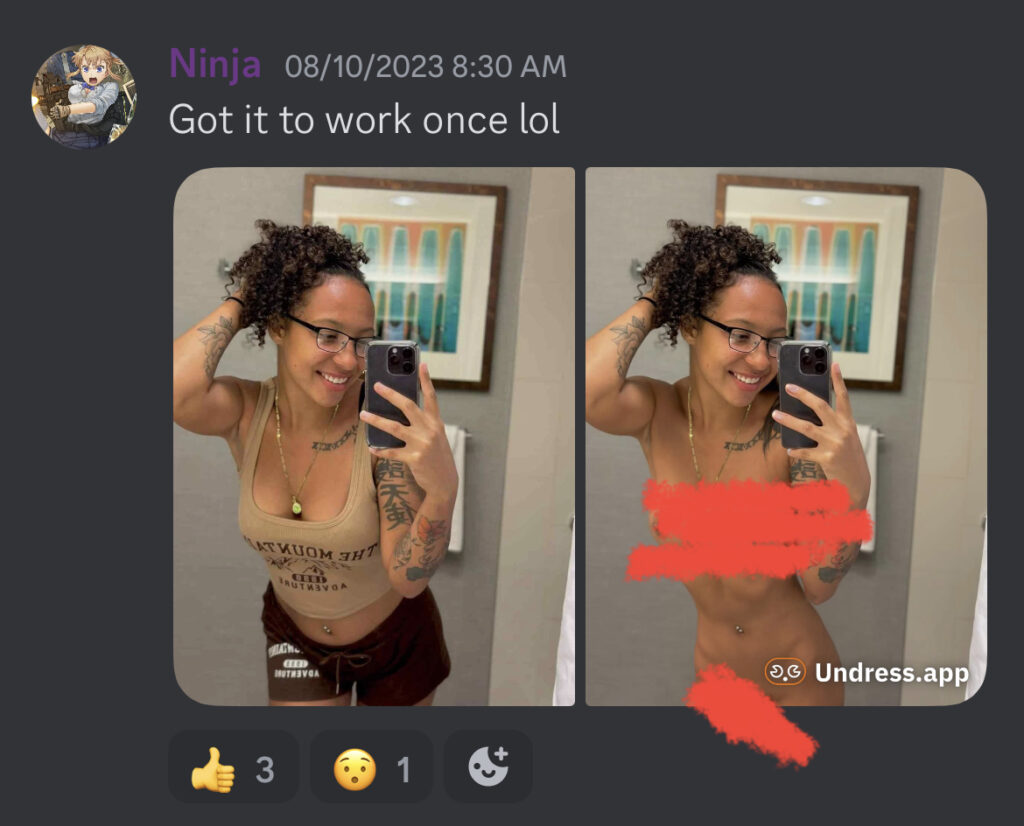

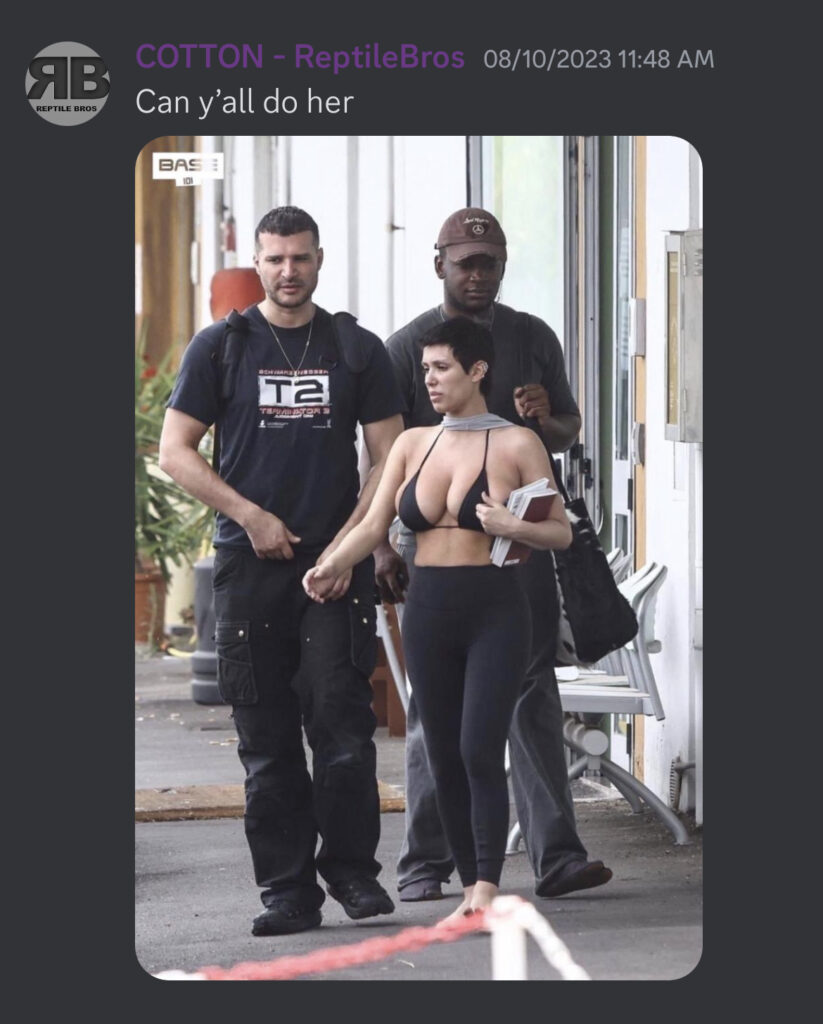

Penny dove into several Discord servers and Facebook groups and uncovered groups of users gleefully sharing tips and tricks while helping each other undress pictures of women that they submitted.

Penny was disturbed and published his research publicly on Twitter, linking to everything and refusing to redact the names of the people involved. He believes them to be criminals who should be held accountable for their actions.

He published his findings on August 12, 2023.

Highlights of his findings include:

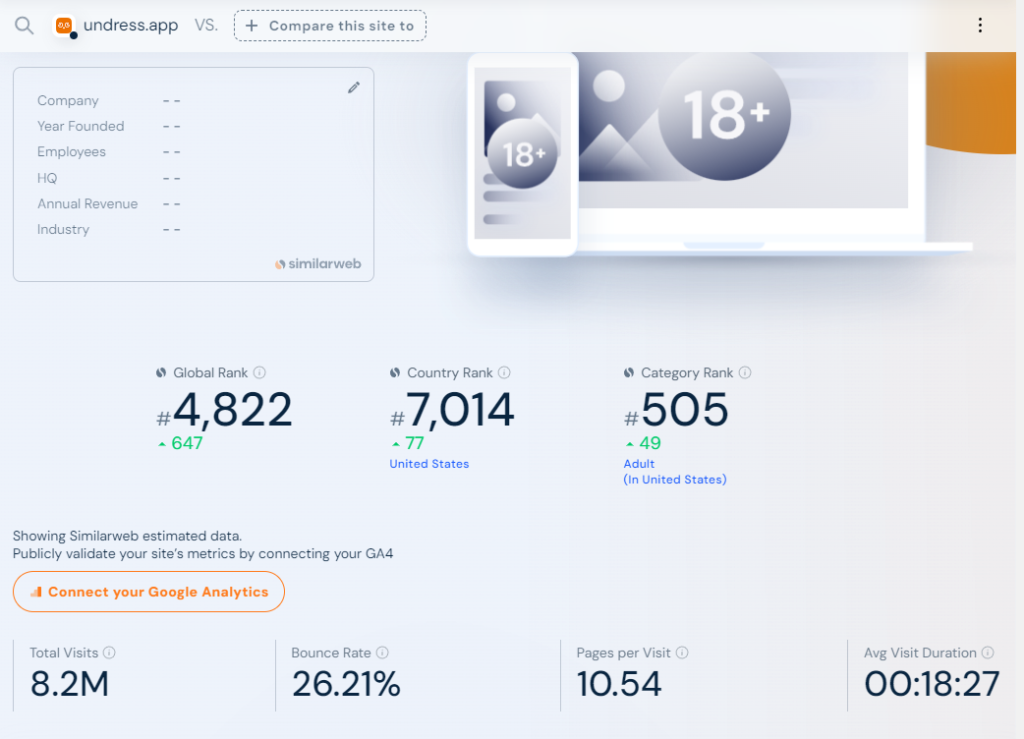

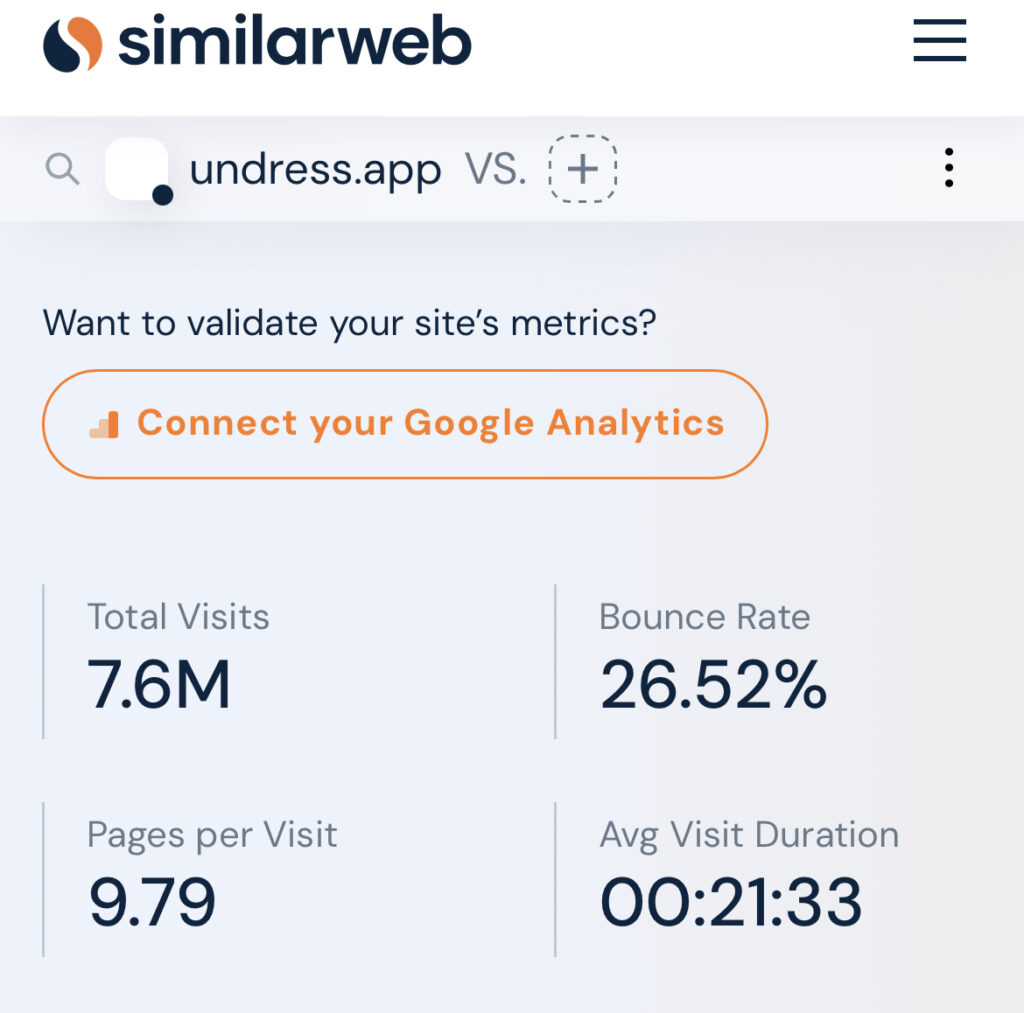

Popularity and Engagement: The Undress app is gaining significant attention, with over 7.6 million visits in one month (now up to 8.2 million) and an average session duration of 21 minutes 33 seconds (now down to 18:27), according to SimilarWeb.

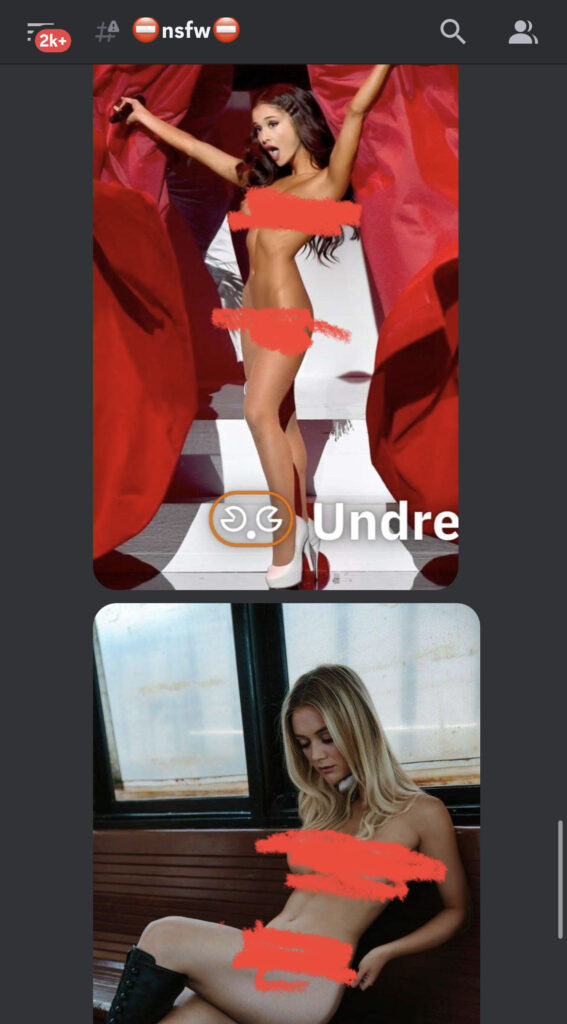

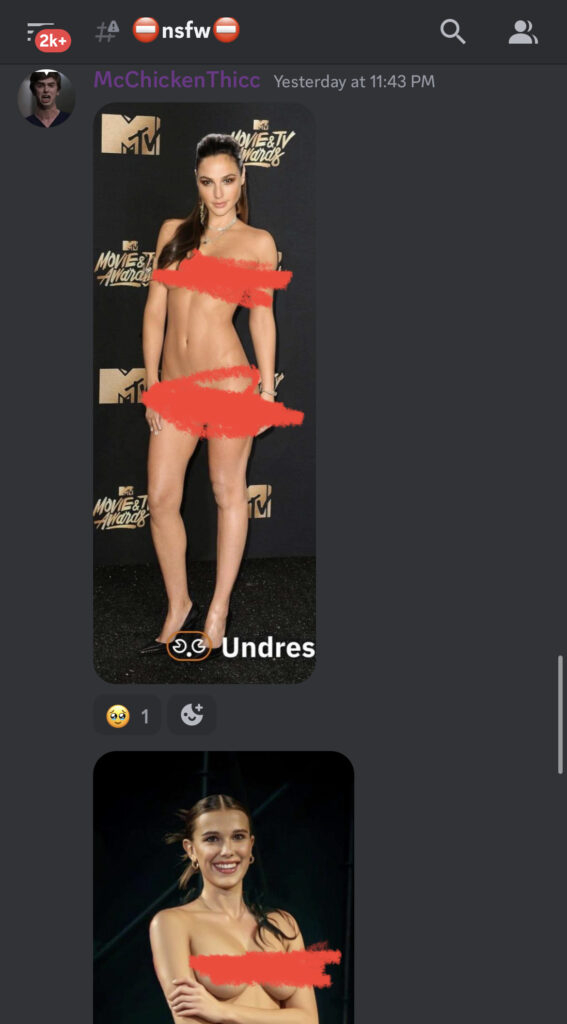

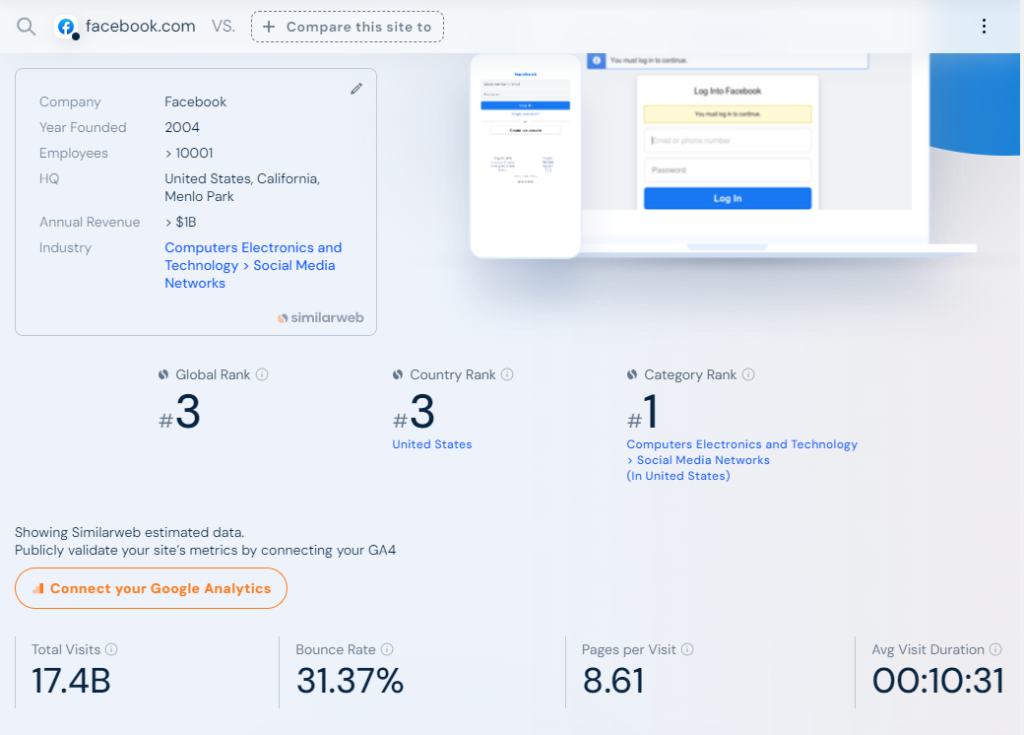

That session duration surpasses the engagement levels of platforms like TikTok and Facebook. The app’s global ranking soared from 22,464 to 5,469 in just three months. As of August 2023, it’s the 4,822nd most popular website in the world and the 505th most visited adult site in the United States.
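For readers who want to check the movement in these figures, the short sketch below works out the changes from the numbers quoted above. The helper names are made up for illustration, and the inputs are only the visit counts, session durations, and rankings already cited.

```python
def percent_change(old, new):
    """Relative change from old to new, as a percentage."""
    return (new - old) / old * 100.0


def to_seconds(mmss):
    """Convert a 'minutes:seconds' string such as '21:33' into seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Monthly visits: 7.6 million, later 8.2 million
visits_change = percent_change(7_600_000, 8_200_000)

# Average session duration: 21:33, later 18:27
session_change = percent_change(to_seconds("21:33"), to_seconds("18:27"))

# Global ranking: 22,464 to 5,469 (a smaller number is a better rank)
rank_places_gained = 22_464 - 5_469

print(f"Visits: {visits_change:+.1f}%")
print(f"Session duration: {session_change:+.1f}%")
print(f"Rank improvement: {rank_places_gained:,} places")
```

In other words, visits rose by roughly 8 percent while the average session shortened by roughly 14 percent, and the site climbed nearly 17,000 places in the global ranking.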

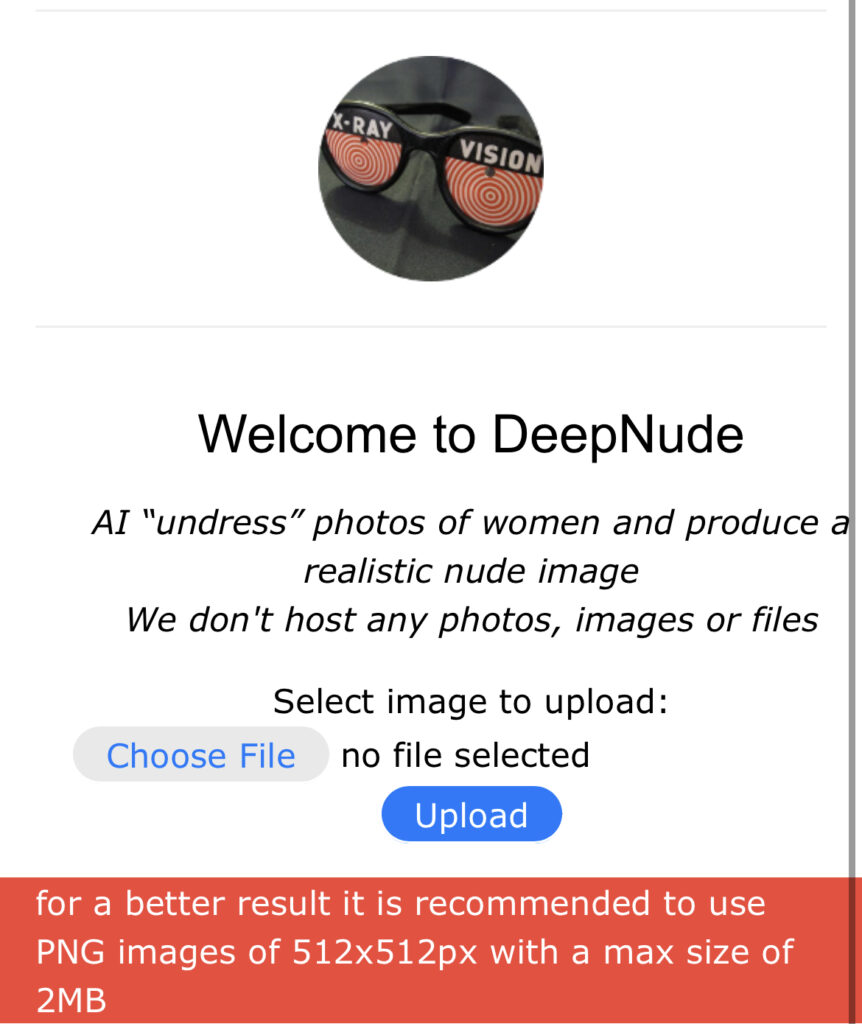

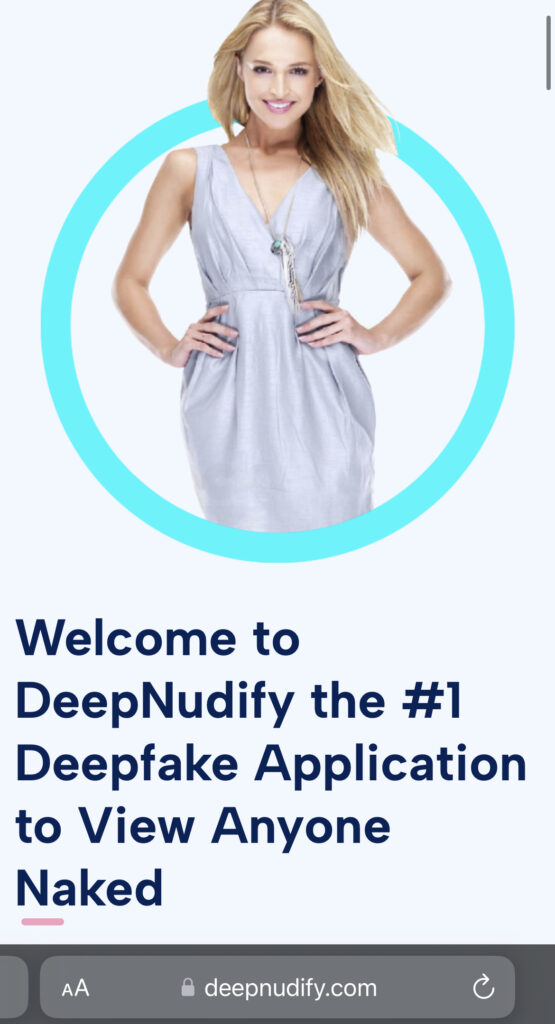

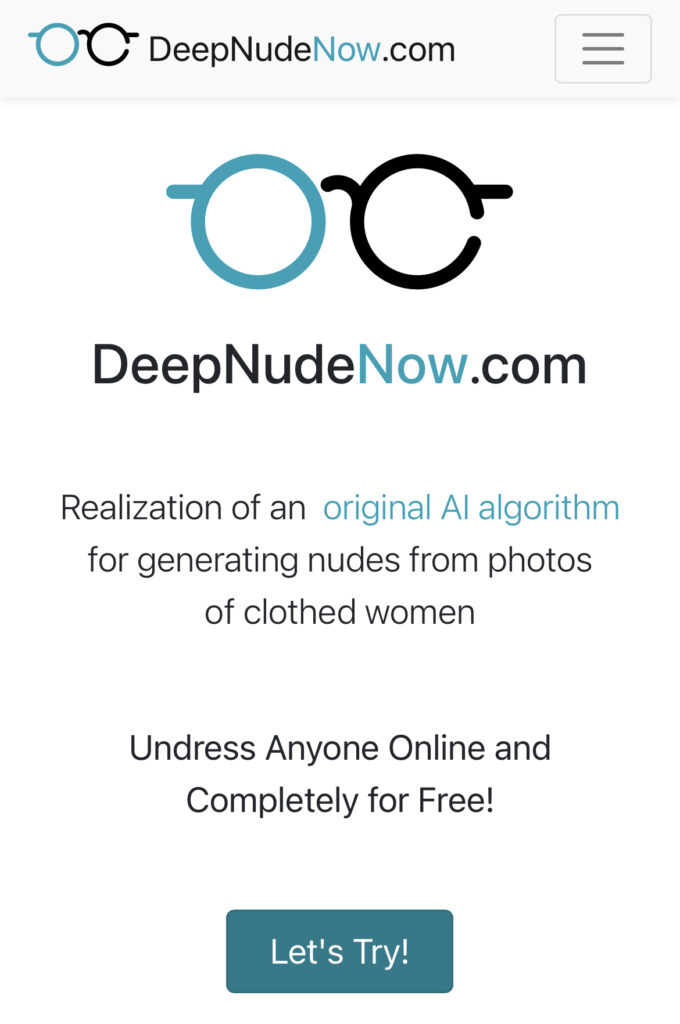

Functionality: Undress allows users to upload a photo and generate a “deep nude” image, altering the person’s clothes, height, skin tone, and body type. And it’s not the only one on the market. In fact, there are now over a dozen undress apps online, featuring names like “Deepnude.AI” and “Deepnudify.”

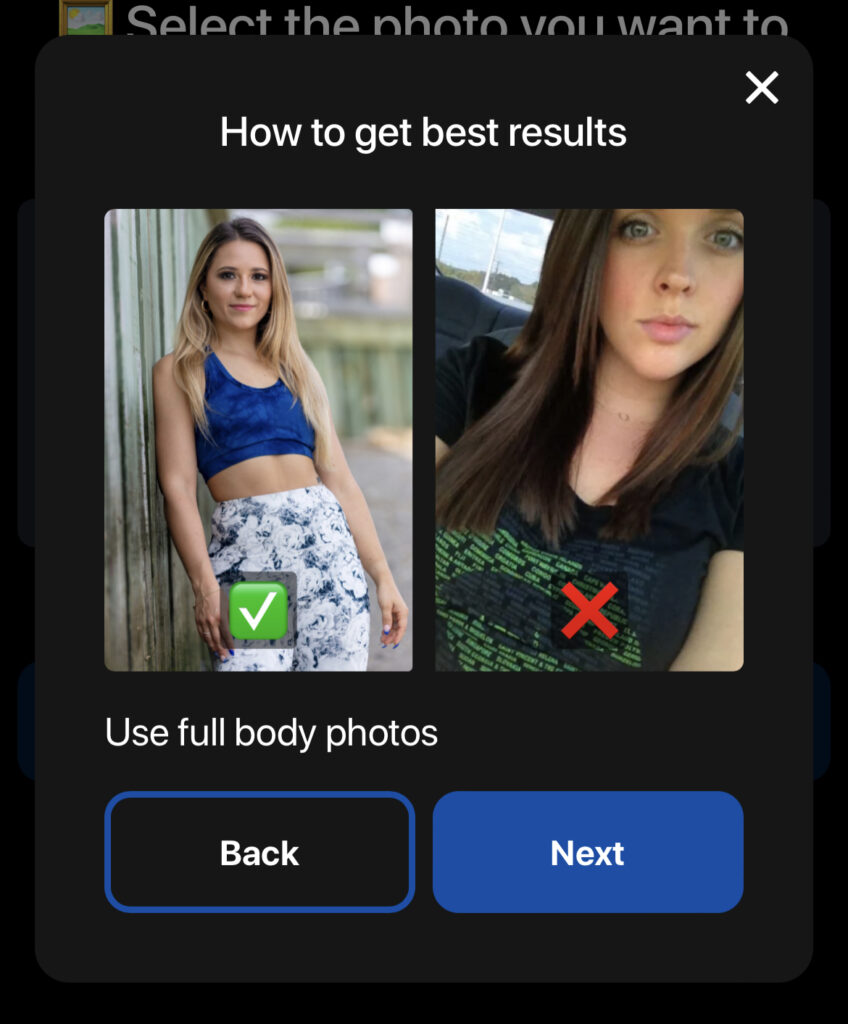

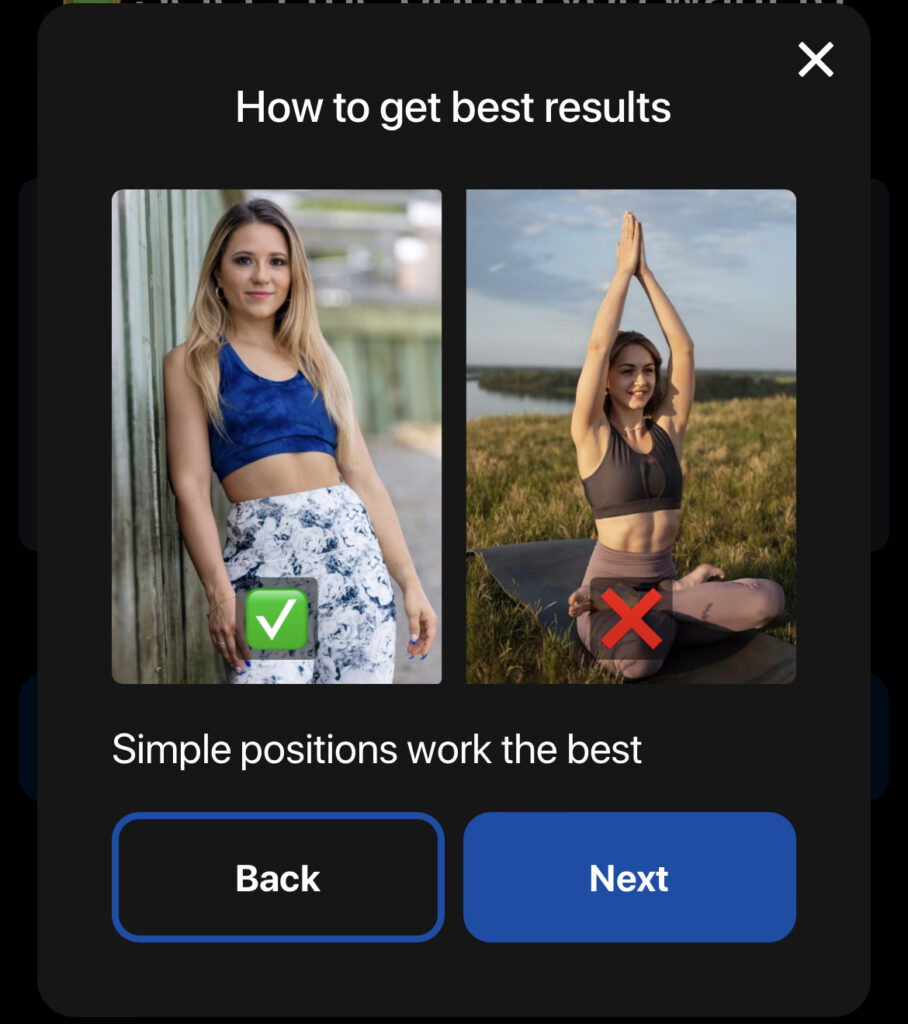

Each has a varying level of sophistication and often comes with FAQs and guides to help pick the right photograph to properly undress the woman or girl you are targeting.

Lack of Accountability: Regardless of which platform you choose, the app’s creators disclaim any responsibility for the images generated, leaving victims with no avenue for complaints or removal of nonconsensual imagery.

If anything, it appears that marketplaces like Product Hunt and ArtStation are both platforming and encouraging listicles and press releases promoting the onslaught of undress apps with no real legal purpose.

You can undress yourself IRL and look in the mirror, so there’s no reason for this technology to exist for anyone to use on other people’s images. And the technology is so refined now that it’s a simple matter of uploading and clicking a button.

Community and Extortion: Platforms like Discord, Telegram, Reddit, and 4chan have become spaces where users share images they want altered by Undress. There are reports of fraudulent loan apps using tools like Undress to extort money from individuals by morphing their images.

Even if the tools and resources are removed from the marketplaces above, a quick Google search shows that there are dozens more waiting to take their place and tap into the search traffic from millions of users every month.

Regulatory Shortcomings: Despite the surge in usage and potential for harm, there is currently no effective regulation governing these generative AI tools or their deepfake and undress outputs. So far, the Federal Election Commission is only considering regulating AI in political ads.

The White House launched the Task Force to Address Online Harassment and Abuse in June 2022, long before the launch of Stable Diffusion made this a more widespread problem. Both the US and UK have various regulations in the pipeline tackling the issue from the perspective of online image abuse of both children and adults. You can join My Image My Choice for more resources on how to protect yourself.

Harm to Vulnerable Populations: Children under the age of 18 are especially at risk, and once these altered images find their way online, they’re nearly impossible to remove. Kids are also often unable to control whether their parents share photos of them online.

A recent commercial from Deutsche Telekom warns of the dangers of sharing your children’s photos online. Using AI technology, its creators age a child’s photo and animate it to say and do whatever they want.

Future Implications: Experts warn that these tools will continue to improve, making it increasingly difficult to distinguish altered images from real ones. And they will continue getting better at recognizing people in different poses and clothing, making it easier to undress anybody using any image.

Proposed Solutions: Technology experts suggest a two-pronged approach to combat the issue: technological solutions like classifiers to identify fake images, and regulatory frameworks that mandate clear labeling of AI-generated content. However, it’s also necessary to tackle the problem of AI misuse.

Much like exploitative data scraping policies allowed generative AI companies to monetize the work of artists, graphic designers, and photographers, AI is being misused on images its users don’t own. Both civil and criminal actions may be necessary to stave off continued destruction caused by these programs.

The Aftermath

Penny’s reporting led to several investigations, including one from 404 Media’s Samantha Cole.

Her article discusses the rise of platforms like CivitAI and Mage that allow users to create and share AI-generated pornographic images. While some of the images are based on consensual adult material, there is a significant and troubling amount of non-consensual content being generated, including specific images of real people. Investigations by 404 Media reveal that the scope of AI-generated porn is much larger than previously reported. The platforms also host communities where users share tips on generating such content.

Mage, for example, has over 500,000 accounts and generates “seven-figure” annual revenue. While the founders claim that NSFW content is a minority and behind a paywall for safety, 404 Media found that the platform still enables easy access to non-consensual pornographic images. The platform’s automated moderation system, “GIGACOP,” appears to be ineffective at preventing abuse. Mage was removed from Product Hunt after inquiries about its policy violations.

The Negative Effects

Deepfakes aren’t new. In fact, MrDeepFakes was taken offline in 2019, only to return once interest picked up after the launch of Stable Diffusion.

Several high-profile influencers have made videos about the situation, including the story of QTCinderella and others after Atrioc was caught on stream visiting deepfake sites. He later went on to help remove hundreds of thousands of nonconsensual deepfakes on his apology tour this summer.

Women who have been deepfaked into adult situations have repeatedly reported it feeling much the same psychologically as physical and IRL sexual abuse. It’s a violation of another person’s human rights, and influential women online like Sunpi have spoken out.

The issue largely affects women, something quantified by Sensity AI, which has tracked deepfake porn online since 2018 and found that 90-95% of it is nonconsensual and 90% of that is nonconsensual deepfakes of women. Even when a man is featured, such as Prince Harry or Harry Styles on MrDeepFakes, he is depicted in gay porn, meaning the content is still made by and for men.

In fact, it was Cole (working for Vice before co-founding 404 Media) who first brought attention to the problem in 2017 after discovering a Reddit user by the name deepfakes spreading nonconsensual AI content.

Her reporting led to the DeepNudes site (and a Telegram bot) being taken down in 2018, although both returned in full force this year.

To Be Continued?

Thus far, regulators are still slow to move, and although advocacy groups are working hard, there’s not much else we can do. Even an image protection solution like WebGlaze is largely ineffective with image-to-image model training methods.

All we can do right now is hope that the media, police, and regulators around the world are paying attention. Deepfake and undress apps are here to stay, and their popularity will only continue to grow as the technology gets better. There is a Change.org petition you can sign to take down MrDeepFakes, and it’s inevitable that these non-consensual sites will get regulatory attention.

This is the age of AI we live in.