CivitAI Facilitates the Use of Stolen Intellectual Property

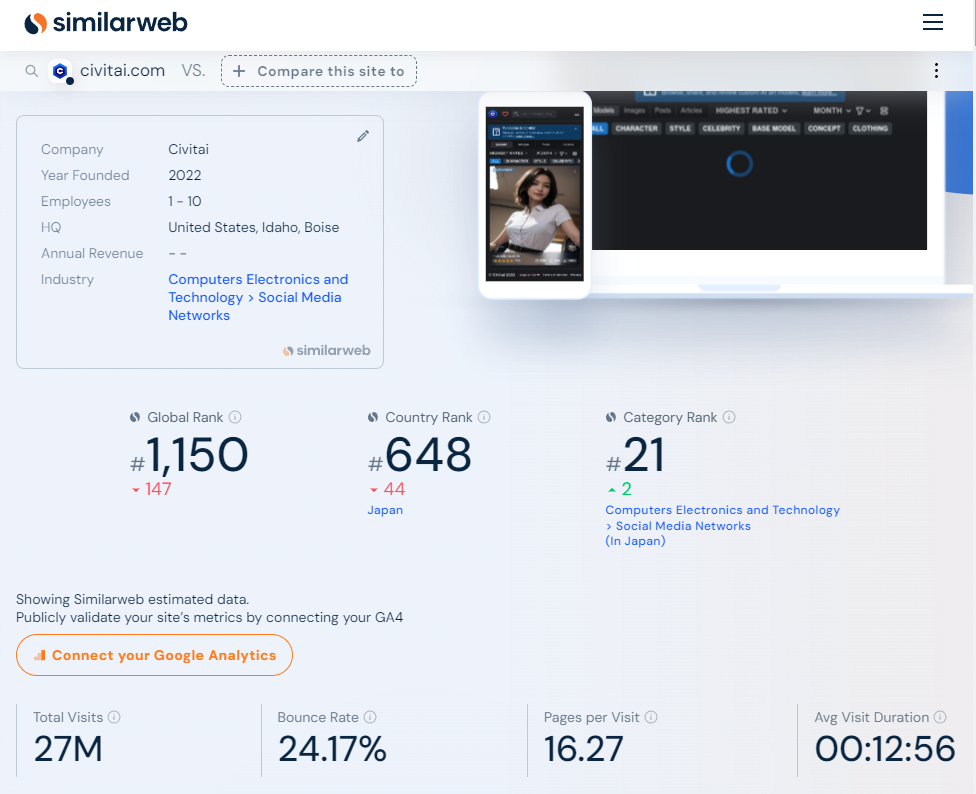

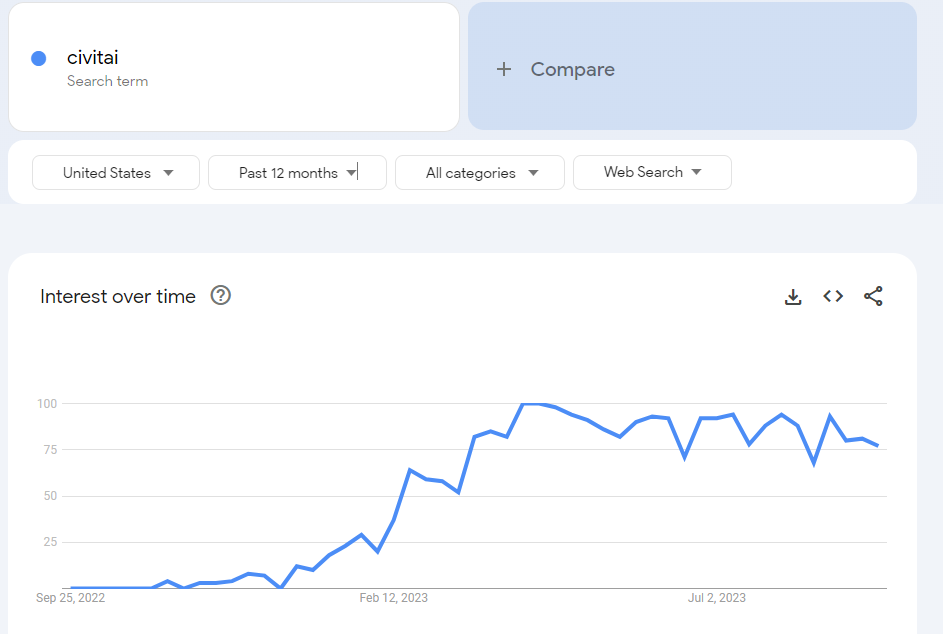

CivitAI came out of nowhere over the past year to become one of the most visited websites on the internet. With 27 million views last month, it is the 21st most popular social media network in Japan and its global rank is 1,150, according to SimilarWeb.

The site gained notoriety as a marketplace where users can upload and download various AI generation models to fine-tune Stable Diffusion. These fine-tunings allow you to create highly overfitted styles, objects, and people that are not trained (or well trained) in the base model.

Of course, this often leads to users uploading models based on images they do not own the rights to, and artists, photographers, and public figures have been forced to contact the company to opt out of having their work and likeness exploited.

Its opt-out policy has drawn the ire of both artists and its own user base: artists denounce the difficulty of the opt-out method (and the fact that opting out is necessary at all), while AI art advocates (check out this open letter on Reddit) find it unfair that some models are left with the scarlet letter of being reported for infringement.

Let’s dig into CivitAI to determine how it became so popular and whether it could find itself on the wrong side of Section 230 of the Communications Decency Act of 1996.

TL;DR

- CivitAI is a hotbed of infringing IP that violates both existing trademarks and copyrights.

- It is one of the most popular websites on the internet, drawing 27 million views each month.

- Artists continually contact the platform to opt out of being included without their consent, credit, or compensation.

- The site also regularly gets backlash for hosting NSFW content, including deepfake pornography and child sexual abuse material (CSAM).

- CivitAI has an opt-out form, but it has been called out repeatedly in the past year for hosting content that violates IP rights.

Background

About CivitAI

CivitAI is an online platform launched in November 2022 by Founder & CEO Justin Maier and currently has a team of seven people, according to LinkedIn. It was created for “fine-tuning enthusiasts” to upload, share, and discover custom AI-generated media models. These models are machine learning algorithms trained on specific datasets to create art or media in various forms, such as images, music, videos, and more. Once trained and added to the base model, these models produce content highly influenced by their training data.

As of September 2023, the website exclusively hosts Stable Diffusion image generation models, including checkpoints and low-rank adaptations (LoRAs) for both Stable Diffusion and Stable Diffusion XL.

The platform is guided by Terms of Service meant to ensure user security and respect, although their effectiveness is hotly debated. According to its website, CivitAI aims to be more than a platform for accessing AI tools, with the ultimate goal of “democratizing” AI media creation and making AI resources accessible to everyone.

About Stable Diffusion

Stable Diffusion is a text-to-image deep learning model released on August 22, 2022. It was developed by the CompVis Group and Runway and funded by UK-based Stability AI, which is led by Emad Mostaque. It’s written in Python and runs on consumer hardware with at least 8GB of GPU VRAM. The most current version as of this writing is Stable Diffusion XL (SDXL), which is freely available online and can be installed locally.

The model uses latent diffusion techniques for various tasks such as inpainting, outpainting, and generating image-to-image translations based on text prompts. The architecture involves a variational autoencoder (VAE), a U-Net block, and an optional text encoder. It applies Gaussian noise to a compressed latent representation and then denoises it to generate an image. Text encoding is done through a pretrained CLIP text encoder.
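The noising and denoising idea described above can be illustrated with a toy example. This is a minimal sketch, not Stable Diffusion’s actual code: the four-number `latent`, the `alpha_bar` value, and the function names are illustrative assumptions, and the real model uses a trained U-Net to estimate the noise over many steps rather than being handed the exact noise.

```python
import math
import random


def add_noise(latent, alpha_bar, rng):
    """Forward diffusion: mix the clean latent with Gaussian noise.

    z_t = sqrt(alpha_bar) * z_0 + sqrt(1 - alpha_bar) * eps
    """
    noise = [rng.gauss(0.0, 1.0) for _ in latent]
    noisy = [
        math.sqrt(alpha_bar) * z + math.sqrt(1.0 - alpha_bar) * e
        for z, e in zip(latent, noise)
    ]
    return noisy, noise


def recover_latent(noisy, predicted_noise, alpha_bar):
    """Invert the noising equation given an estimate of the noise.

    In a real diffusion model the estimate comes from the U-Net;
    here the true noise is passed in to show the algebra only.
    """
    return [
        (z_t - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
        for z_t, e in zip(noisy, predicted_noise)
    ]


rng = random.Random(0)
latent = [0.5, -1.2, 0.3, 0.9]  # hypothetical stand-in for a VAE-compressed image
noisy, noise = add_noise(latent, alpha_bar=0.25, rng=rng)
restored = recover_latent(noisy, noise, alpha_bar=0.25)
```

With a perfect noise estimate, `restored` matches `latent` up to floating-point error. The quality of a generated image therefore depends on how well the network has learned to predict that noise, which is exactly what fine-tuning changes.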

Stable Diffusion was trained on the LAION-5B dataset, a publicly available dataset containing 5 billion image-text pairs. Stability AI raised $101 million in a funding round in October 2022. The model is considered lightweight by 2022 standards, with 860 million parameters in the U-Net and 123 million in the text encoder.
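To put “lightweight” in perspective, the parameter counts above can be turned into a rough estimate of how much memory the weights occupy. This is a back-of-the-envelope sketch: it assumes 4 bytes per parameter at 32-bit precision and 2 bytes at 16-bit, and it ignores the VAE, activations, and any framework overhead.

```python
UNET_PARAMS = 860_000_000          # figure quoted in the article
TEXT_ENCODER_PARAMS = 123_000_000  # figure quoted in the article


def weight_size_gb(num_params, bytes_per_param):
    """Approximate size of the raw weights in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


total = UNET_PARAMS + TEXT_ENCODER_PARAMS  # 983,000,000 parameters
fp32 = weight_size_gb(total, 4)            # about 3.9 GB
fp16 = weight_size_gb(total, 2)            # about 2.0 GB
```

Under these assumptions the weights alone take roughly 2 to 4 GB, which is consistent with the model running on a consumer graphics card.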

The Uncivilized Scourge of CivitAI

When Midjourney and Stable Diffusion launched in 2022, they created a frenzy of users seeking to refine them so they could generate images of specific people, places, and things. It wasn’t long before these users found a resource in CivitAI.

The platform allows users to upload LoRA models and checkpoints that fine-tune Stable Diffusion so it can produce specific people, objects, and styles from training on a lower volume of images.

Here, users not only create these models but also share tips and tricks on optimizing their creations found through Reddit communities and Discord forums. From altering pelvic poses to adjusting bust sizes, collective wisdom is helping improve the realism and intricacies of these AI-generated images.

One alarming aspect of CivitAI’s rise is its use of images of real people, often taken from social media without their consent. Despite this being against CivitAI’s TOS, the practice continues unabated. Such use amplifies existing concerns about digital sexual exploitation and the objectification of women.

What is LoRA?

LoRA (low-rank adaptation) is a lightweight fine-tuning technique, first introduced by Microsoft researchers for large language models and later adapted to Stable Diffusion. A LoRA layers a small set of trained weights on top of the base text-to-image model. Users can then input text descriptions to generate images that match their fantasies, down to very specific details like hair color, poses, and clothing. LoRA has democratized the production of AI-based pornography: even those with little technical expertise can create explicit content using just a few images.

For comparison, the base models for both Midjourney and Stable Diffusion relied on over five billion images contained within the LAION-5B dataset. Fine-tuning them with a LoRA requires only 5-10 images (although you’ll have better results with 40 or more, especially when training styles rather than objects or people). Training takes approximately 100 steps per image, and it can be done on a consumer-grade Nvidia graphics card.
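To see why a LoRA can be trained from so few images on consumer hardware, it helps to look at the low-rank idea itself: instead of updating a full weight matrix, the technique trains two small matrices whose product is added to the frozen weights. The sketch below is a rough calculation only. The 768×768 layer size and the rank of 8 are illustrative assumptions, not values taken from any specific model on CivitAI, and the step count simply applies the 100-steps-per-image rule of thumb mentioned above.

```python
def full_param_count(d_out, d_in):
    """Trainable parameters when updating a whole d_out x d_in weight matrix."""
    return d_out * d_in


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters for a low-rank update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in).
    """
    return rank * (d_out + d_in)


def total_training_steps(num_images, steps_per_image=100):
    """Rule-of-thumb step count: roughly 100 steps per training image."""
    return num_images * steps_per_image


# Hypothetical attention layer: 768 x 768 weights, LoRA rank 8.
full = full_param_count(768, 768)     # 589,824 trainable values
lora = lora_param_count(768, 768, 8)  # 12,288 trainable values
reduction = full // lora              # 48x fewer values to train

few_images = total_training_steps(10)   # 1,000 steps
more_images = total_training_steps(40)  # 4,000 steps
```

In this hypothetical layer the LoRA trains about 48 times fewer values than a full update would, which is part of why a few thousand steps on a single graphics card are enough.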

There are three other ways to go about it: custom checkpoints, embeddings, and LoRA Beyond Conventional Methods, Other Rank adaptation implementations for Stable Diffusion (LyCORIS). That lengthy, unoriginal word soup is not ours; we at Luddite Pro have much better naming conventions.

Custom Checkpoints require you to replace your base SD or SDXL model with a new checkpoint that has been adapted to include whatever specific object, style, or person. These checkpoints typically only take 4-5 images to work.

Embeddings use textual inversion to teach the AI a new keyword that can be used to invoke a style, person, or object with as few as 3-5 sample images.

LyCORIS combines LoRA with either LoHa (LoRA with Hadamard Product representation) or LoCon (Conventional LoRA) for a more efficient training experience that more deeply adapts the neural network.

Each represents a different way to fine-tune a Stable Diffusion model to replicate a person, object, or style. It didn’t take long to see some big red flags of abuse.

AI Models for IP (and Other) Abuse

These options make it easier for the average person to create models, although there’s no mechanism in place to determine whether users own the images used to train these models. You’ll find everything from famous characters like Pikachu to celebrities like Britney Spears, brands like LEGO, and individual artists like Greg Rutkowski and Stanley “Artgerm” Lau on the marketplace, none of which are officially sanctioned by the IP owners.

Users have also repeatedly pointed out that the vast majority of models trained on humans depict women. A scroll through the “celebrities” section of the site last month, for example, showed a ratio of nearly 100 women for every one man.

Non-Consensual AI-Generated Porn and CSAM

The intersection of AI and pornography is raising significant ethical concerns, notably around consent, exploitation, and the potential for harm. Non-consensual deepfake pornography already accounts for 96% of all synthetic sexual videos on the internet, with dedicated platforms attracting millions of visitors each month. Of that total, 99% of non-consensual deepfake porn involves women, and a 404 Media investigation found payment processors like Stripe gladly help deepfake sites monetize.

Newer AI models like DALL-E 2 and Stable Diffusion have enabled even more disturbing possibilities, pushing the boundaries of ethical implications and societal impact. CivitAI users have reported numerous times on forums like GitHub (where Maier is active and responds) that the site is filled with non-consensual NSFW images (and models that make them), often even featuring minors.

And that’s just the tip of the iceberg–there are also cultural issues.

Erasing Cultural Heritage

One of the most popular embeddings on the entire platform with over 38,000 downloads and a five-star rating with 97 reviews is an embedding model called “Style Asian Less.”

This model (created by Vhey Preexa, a Serbian American who goes by the user handle “Zovya” on CivitAI) aims to remove Asian influences without diminishing the quality of training, allowing for the creation of images that better represent other cultures. The default SD models were found to overly exaggerate Asian features, due to biases in the initial training data.

Dr. Sasha Luccioni told NBC News AI can easily amplify dominant classes while ignoring underrepresented groups. There’s also a problem of overrepresentation of hypersexual imagery in the datasets that train AI models, especially with niches like Japanese hentai and other waifu imagery.

“There’s a lot of anime websites and hentai,” she told NBC. “Specifically around women and Asian women, there’s a lot of content that’s objectifying them.”

The effort to correct this on CivitAI highlights the larger issue of racial and gender biases in AI systems, a topic that has also been a point of concern for other platforms like DALL-E. While the model tries to correct for cultural biases, there are ethical concerns of treating cultural elements as variables to be adjusted in AI.

Using Stolen IP

Even if the above cultural and ethical problems are resolved, CivitAI still hosts almost entirely stolen content. Each of the most popular models appears to have been made without the consent of, compensation for, or credit to the original author.

The unauthorized use of copyrighted and trademarked material to train AI models constitutes intellectual property theft and should be legally punishable. Copyright and trademark laws exist to protect the creative and intellectual investments made by authors, artists, and other creators.

When AI models are trained on copyrighted and trademarked works without proper licensing or explicit consent, they effectively repurpose those works into new formats, potentially profiting off someone else’s intellectual labor. This undermines the very essence of copyright and trademark law, which aims to incentivize innovation and creativity by granting exclusive rights to creators for their original works.

Moreover, this form of infringement is not just a one-time offense but continues to propagate as the AI model is deployed and used. Each new output generated by the model carries the DNA of the original copyrighted and/or trademarked material, creating a ripple effect of infringement.

As AI becomes more prevalent in content generation, it’s imperative that the legal system adapts to consider these implications. Failure to hold entities accountable for using copyrighted and trademarked materials in AI training sets would not only dilute the value of the original works but also disincentivize future creative endeavors. Therefore, strict legal measures should be in place to prosecute those who engage in this form of IP theft.

But it’s unclear if CivitAI will run afoul directly of copyright or trademark law due to its status as a user-generated marketplace. Instead, let’s examine if it’s in violation of Section 230.

Is CivitAI Protected by Section 230?

Ron Wyden and Christopher Cox, the co-authors of Section 230 of the Communications Decency Act, discussed the U.S. Supreme Court’s recent decision to leave the law untouched with Fortune this month.

They emphasize that while Section 230 provided much-needed legal clarity for internet platforms regarding user-generated content, the rise of AI is posing new challenges in determining liability for defamatory or illegal content online. The Supreme Court cases Gonzalez v. Google and Twitter v. Taamneh focused on whether these platforms aided terrorism by hosting ISIS videos; the Court ruled 9-0 that the platforms were not liable, pointing out the risks of shifting liability from wrongdoers to general service providers.

CivitAI ties less into AI copyright issues and more into the ongoing debates around modifying Section 230 and the First Amendment implications for content moderation decisions by platforms. As AI tools like Stable Diffusion and ChatGPT grow in popularity, new questions of liability and regulation arise that may be far more complex than the issues Section 230 was designed to address.

Section 230 of the Communications Decency Act provides that online platforms like websites, blogs, and social media are generally not liable for content posted by their users.

It’s important to note that Section 230 does not impose specific moderation requirements for platforms to maintain their protected status. Instead, it shields them from liability for user-generated content, while also allowing them the leeway to moderate, remove, or filter such content if they choose to do so. This is under the premise that they are acting in “good faith” to restrict access to material that they consider to be “obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable.”

When it comes to CivitAI, the TOS and public statements from its CEO could provide a layer of defense. However, Section 230 protection does not extend to:

- Copyright infringement. Copyright claims fall under the Digital Millennium Copyright Act (DMCA) of 1998, and online platforms are expected to adhere to it.

- Federal criminal liability.

- Claims arising from a platform’s own content or actions that are not related to user-generated content. This will be a key point in addressing AI’s harms.

Still, the legal landscape surrounding AI-generated art is complex and largely unsettled. Two primary questions are at the forefront of finding an answer:

Can AI-generated art be copyrighted? The U.S. Copyright Office currently rejects AI as the author of art (thanks to Kristina Kashtanova and Stephen Thaler), stating that copyright protection only applies to works resulting from human intellectual labor. It issued guidance in March 2023 (which a federal court adhered to in August 2023) to ensure everyone is on the same page, but Kashtanova filed another copyright claim in April 2023 that’s still up for debate.

What happens when artists claim their art has been ‘learned from’ without authorization to train AI models? Several ongoing lawsuits–including one from Getty Images against Stability AI and another from a coalition of artists versus Stability, Midjourney, and DeviantArt–are setting the stage for how fair use doctrine will apply to AI-generated works.

Legal experts advise caution for AI developers, businesses, and individual content creators. Developers should ensure they have proper licenses for their training data and create audit trails for AI-generated content. Businesses should vet their AI tools carefully and include legal protections in contracts. Content creators should actively monitor for unauthorized use of their IP and might consider creating their own AI-trained models on lawfully sourced content once the ethics are cleared.

Overall, existing laws are being tested as AI technology advances, with both risks and opportunities emerging for different stakeholders. Until these matters are settled, it appears CivitAI is getting a pass.

How to Move Forward

CivitAI is an online platform that gained significant popularity in the past year for allowing users to upload and download AI generation models for fine-tuning Stable Diffusion.

With 27 million views last month, the site raises concerns about intellectual property infringement and the ethical use of images. Artists, photographers, and public figures have had to request the removal of their work, and the site’s opt-out policy has been criticized as difficult and unfair. CivitAI has also faced backlash for hosting NSFW content, including deepfake pornography and child sexual abuse material.

Despite these controversies, the platform continues to attract a large user base, facilitated by its communities on Reddit and Discord where users share tips and tricks for optimizing AI-generated images. Once the copyright issues are settled, however, and the US government creates the AI rules it’s been working on all year, this AI marketplace is sure to become a legal hotbed.