Stability AI Paints a Pretty Picture of Open Source That Doesn’t Exist

Emad Mostaque, founder of Stability AI, claims to have initiated the AI boom and says his text-to-image generator, Stable Diffusion, pressured OpenAI to launch ChatGPT. However, according to an investigative report from Forbes, many of his assertions are misleading or false.

Although he presented himself as holding a master’s degree from Oxford and as an award-winning hedge fund manager, Mostaque holds only a bachelor’s degree, and his hedge fund collapsed after performing poorly.

The report also reveals that he exaggerated Stability AI’s associations, claiming partnerships with UNESCO, the OECD, the WHO, and the World Bank, claims those organizations denied. And although Stability AI gained prominence with Stable Diffusion, the underlying code came from another group: researchers led by professor Björn Ommer at Ludwig Maximilian University of Munich and Heidelberg University.

Making matters worse, Stability AI co-founder Cyrus Hodes claims in a lawsuit filed in July that Mostaque misled him into selling his stake in the company for $100, in two transactions in October 2021 and May 2022. Based on Stability AI’s most recent funding valuation of $4 billion, that stake would be worth $500 million today.

And that is just the tip of the iceberg of the problems Stability AI has caused and is facing. Artists, photographers, and designers around the world are awaiting the outcome of two copyright infringement lawsuits over Stable Diffusion’s use of copyrighted work without consent, credit, or compensation.

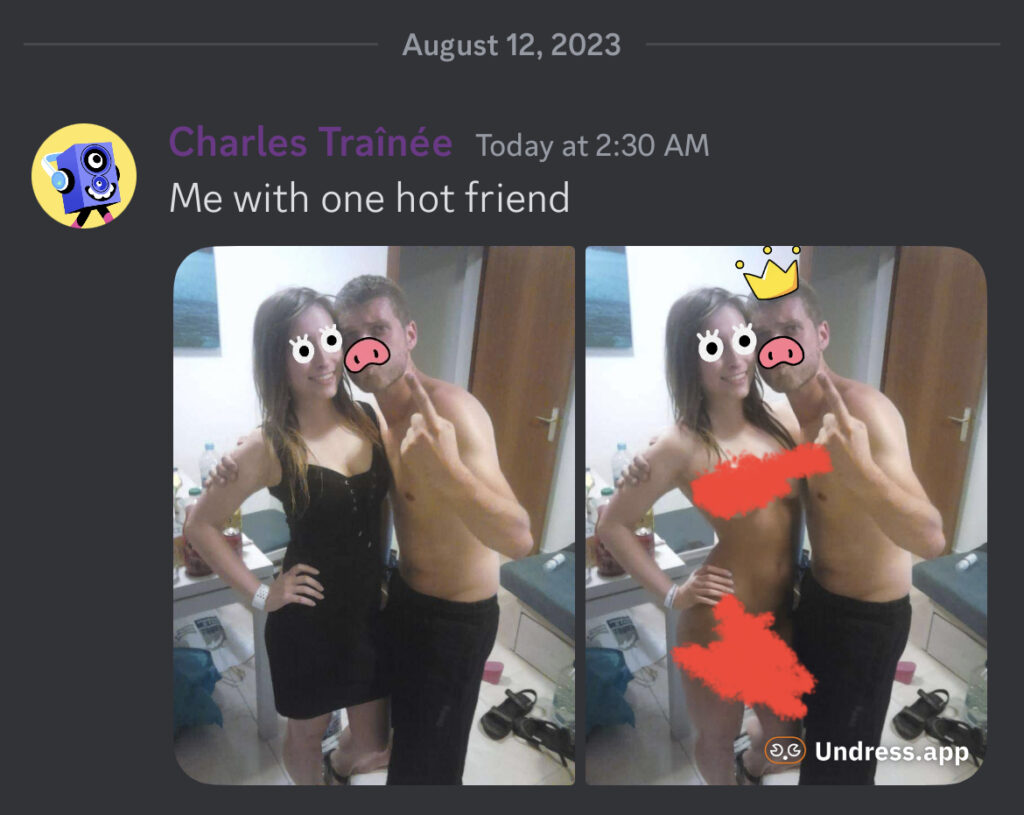

And because Stability released its software into the wild, Stable Diffusion has notoriously been used to create some of the most gruesome, gory, inappropriate, and often illegal images, which are then shared across forums and social media sites. These include nonconsensual deepfake porn and sexualized imagery of children.

So, how did a company that accumulated this mountain of problems become a $4 billion business, and what might the future hold for Stability AI, its unstable business model, and its founder?

Let’s dive in.

TL;DR

- The August 2022 launch of Stable Diffusion fueled the AI-generated image boom, allowing anybody with a powerful enough NVIDIA GPU to run the app locally.

- Stable Diffusion had many features not available in competitors like Midjourney, including inpainting, outpainting, and the ability to train your own model checkpoints and LoRAs.

- The app generated immediate controversy from artists, designers, and photographers for using their work without consent, compensation, or credit.

- Despite being billed as open source, Stability does not fully disclose the training data behind its current Stable Diffusion models, and tools like Have I Been Trained? cannot keep up with them.

- Stable Diffusion also spawned the NSFW offshoot Unstable Diffusion, and both contribute to the rise of deepfake and undress apps online.

- Stability AI also creates generative AI models for music, text, and more.

- There are currently two lawsuits against Stability AI: one from Getty Images and a class action from a trio of artists.

Background

About Stability AI

Stability AI, founded by Emad Mostaque, came to prominence with its product, Stable Diffusion, an AI text-to-image generator. The company was established with the vision of democratizing AI and making it accessible to a broader audience, and it aligns itself with open-source principles. Since its founding, Stability AI has garnered a community of over 200,000 creators, developers, and researchers worldwide. Its stated mission is to provide a foundation to unlock humanity’s potential, emphasizing values like pragmatism, collaboration, ambition, and transparency.

Despite its outward success, Stability AI’s journey has been marked by controversy. Mostaque has been accused of making misleading statements about his academic and professional credentials and about the origins and development of Stable Diffusion. While Mostaque took credit for the tool, its source code was reportedly developed by a separate group of researchers. Stability AI’s rapid fundraising also raised eyebrows, notably a $101 million round closed in October 2022 after Stable Diffusion went viral, given how brief the timeline was. Mostaque claimed significant partnerships and collaborations; however, the veracity of many of these claims remains disputed.

The company also faces scrutiny over the open-source nature of Stable Diffusion, which some users have exploited to create inappropriate content. Although Stability AI introduced filters in newer versions to curb the creation of unsafe content, the company has struggled to maintain its prominence in AI research. Its revenue struggles, its legal challenges, and Mostaque’s often unverified claims about his expertise and the company’s partnerships and achievements make for a complicated history.

Stability AI’s Unstable Leader

Despite achieving a $4 billion valuation and receiving significant investments, Stability faces a host of problems, including unpaid wages and tax issues. According to insiders, Mostaque’s success rests on embellishments and false claims, much of it riding on Stable Diffusion, which went viral last fall.

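Stable Diffusion is an AI image generator that turns text prompts into images. It is a latent diffusion model: a text encoder converts the prompt into a numerical representation, a neural network starts from random noise and removes it step by step in a compressed “latent” image space, guided by that representation, and a decoder then expands the result into a full-resolution image. Everything it “knows” about what images should look like comes from patterns in the image-caption pairs it was trained on.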

Riding Stable Diffusion’s virality and its ability to stand apart from its more popular competitor, Midjourney, Stability’s founder Emad Mostaque secured $101 million from top investment firms in just six days. Stability AI’s investors include:

- Sound Ventures

- Coatue

- Lightspeed Venture Partners

- O’Shaughnessy Asset Management

- Fourth Revolution Capital

- Kadmos Capital

Yet the quick pace of this investment raises questions about the thoroughness of the due diligence. Mostaque boasts of partnerships and collaborations, such as a significant tech project with the African nation of Malawi and a deal with Eros Investments. However, the authenticity of these claims remains uncertain. Notably, despite the initial hype, Stability AI’s revenue lags behind its burn rate, suggesting it may need more funding soon. The company is also grappling with lawsuits alleging that it violated copyright when training its models.

The first lawsuit is from Getty Images, which accuses Stability AI of improperly using more than 12 million Getty-owned images. At the U.S. statutory maximum of $150,000 per infringed work, that adds up to an astronomical $1.8 trillion in potential damages. The second is a class action lawsuit from artists Karla Ortiz, Sarah Andersen, and Kelly McKernan that names DeviantArt and Midjourney as co-defendants.

Together, the suits accuse the company of using the plaintiffs’ copyrighted works for a variety of derivative purposes and of infringing through both its training inputs and its outputs. The artists’ suit also alleges violations of the right of publicity for allowing artists’ names to be used as styles.

Stable Diffusion’s open-source nature also draws criticism, chiefly because it is not as open and transparent as advertised. Mostaque has repeatedly dismissed claims on Twitter that tools such as Have I Been Trained are accurate, yet such tools have so far only been able to map the training data of the SD 1.x models released to the public, not SD 2.x or SDXL. This makes it difficult for artists, photographers, and designers to determine whether their work is being used in current production releases, and harder for them to take legal action.

Meanwhile, plans to let artists opt out have not yet been implemented, even though demand is enormous: Spawning AI reported 80 million opt-outs within three months of its opt-out tool becoming available in December 2022, and 1.4 billion by May 25. It’s unclear when Stability AI will finally honor the opt-out queue that has been building for nearly a year, but opt-outs were not honored for SDXL, the company’s latest release.

IP theft aside, the model is also widely used to build inappropriate tools known as AI undress apps, which let users remove the clothing from someone’s photo in one click without that person’s consent.

In response, Stability AI introduced filters in newer versions to prevent users from generating unsafe content. That did little to stop the flood of apps built on its framework, such as Unstable Diffusion, that not only allow but celebrate fully uncensored NSFW content.

The company also faces challenges in AI research. The version of Stable Diffusion released by the original developers remains more popular than Stability’s in-house model. Moreover, StableLM, introduced as a ChatGPT rival, failed to garner much attention, and the company is trying again with its Stable LM 3B model this week.

Stable Diffusion XL was released to raise the bar for image quality, but at this point, competition from the likes of Adobe Firefly and OpenAI’s DALL-E 3 could mean the company has missed its window of opportunity to make a quick buck by appropriating copyrighted and trademarked works without proper licenses. And with its models and software freely available to the public, businesses have little incentive to pay Stability AI for anything.

Still, Mostaque remains optimistic in his public response to the Forbes article, positioning himself as Stability’s technical leader. He claims to have used AI to discover a unique treatment for autism and boasts of his programming skills, citing a stint at Metaswitch during a gap year before attending Oxford. According to Mostaque, he taught himself to program and was instrumental in developing vital code.

These claims, like many others Mostaque has made, are difficult to verify but reflect his confidence in his capabilities and the future of Stability AI.

Moving on from Stable Diffusion

Mostaque faces significant scrutiny and criticism, especially since Forbes published its investigative report. While he claims that his product, Stable Diffusion, helped kick off the AI boom and pressured OpenAI into launching ChatGPT, the report exposes numerous discrepancies in his statements.

It’s no surprise that he plans to keep raising money and building his public profile while failing to deliver any profitability to his investors, all while profiting on the backs of the creatives whose work he took, from the scraped training data to the source code the platform was built on.

It seems nothing Mostaque does is real, and it’s increasingly clear that he may become the biggest loser of the AI age and a cautionary tale for the techbros building in his wake.